Understanding and Extending Prometheus AlertManager

Lee Calcote


clouds, containers, infrastructure, applications and their management

Show of Hands

AlertManager

Prometheus

Alertmanager is an alert...

Purpose

ingester

grouper

de-duplicator

silencer

throttler

notifier

\ˈnō-mən-ˌklā-chər

a brief Prometheus AlertManager construct review

- Routes - match alerts to their receiver and set how often to notify.

- Receivers - define where and how to send alerts.

- Silencers - match alerts with specific labels and prevent them from being included in notifications.

- Inhibitors - suppress specific notifications when other specific alerts are already firing.

- Grouping - categorizes alerts of similar nature into a single notification.
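To make the inhibitor concrete, here is a minimal Go sketch of how one inhibition rule could be evaluated. It assumes a simplified rule shape loosely modeled on the source_match, target_match and equal fields of AlertManager's inhibit_rules. The InhibitRule type and Inhibited method are hypothetical and are not AlertManager's internal API.

```go
package main

import "fmt"

// Labels is a simplified label set (label name -> value).
type Labels map[string]string

// InhibitRule is a hypothetical, simplified inhibition rule: a firing alert
// matching SourceMatch mutes alerts matching TargetMatch, provided both
// alerts carry identical values for every label listed in Equal.
type InhibitRule struct {
	SourceMatch Labels
	TargetMatch Labels
	Equal       []string
}

// matches reports whether every name/value pair in m is present in l.
func matches(l, m Labels) bool {
	for k, v := range m {
		if l[k] != v {
			return false
		}
	}
	return true
}

// Inhibited reports whether target is muted by any of the firing alerts.
func (r InhibitRule) Inhibited(target Labels, firing []Labels) bool {
	if !matches(target, r.TargetMatch) {
		return false
	}
	for _, src := range firing {
		if !matches(src, r.SourceMatch) {
			continue
		}
		equal := true
		for _, name := range r.Equal {
			if src[name] != target[name] {
				equal = false
				break
			}
		}
		if equal {
			return true
		}
	}
	return false
}

func main() {
	rule := InhibitRule{
		SourceMatch: Labels{"severity": "critical"},
		TargetMatch: Labels{"severity": "warning"},
		Equal:       []string{"alertname", "cluster"},
	}
	firing := []Labels{{"alertname": "HighLatency", "cluster": "eu", "severity": "critical"}}
	fmt.Println(rule.Inhibited(Labels{"alertname": "HighLatency", "cluster": "eu", "severity": "warning"}, firing))
	fmt.Println(rule.Inhibited(Labels{"alertname": "HighLatency", "cluster": "us", "severity": "warning"}, firing))
}
```

A warning for HighLatency in cluster eu is muted while the matching critical alert fires. The same warning in cluster us is not muted, because cluster is listed in Equal.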


Muting

Suppressing

Correlating

group_by: ['alertname', 'cluster']
group_wait: 30s
group_interval: 5m
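Grouping can be pictured as partitioning alerts by the values of their group_by labels. The Go sketch below assumes exactly that and nothing more. groupKey and groupAlerts are hypothetical helpers. The timing settings are not modeled: group_wait is the wait before the first notification for a new group, and group_interval is the wait before notifying about new alerts added to an already-notified group.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// Labels is a simplified label set (label name -> value).
type Labels map[string]string

// groupKey builds a key from the values of the group_by labels, so alerts
// that agree on all of them land in the same aggregation group.
func groupKey(l Labels, groupBy []string) string {
	parts := make([]string, 0, len(groupBy))
	for _, name := range groupBy {
		parts = append(parts, name+"="+l[name])
	}
	return strings.Join(parts, ",")
}

// groupAlerts partitions alerts by their group key.
func groupAlerts(alerts []Labels, groupBy []string) map[string][]Labels {
	groups := make(map[string][]Labels)
	for _, a := range alerts {
		k := groupKey(a, groupBy)
		groups[k] = append(groups[k], a)
	}
	return groups
}

func main() {
	groupBy := []string{"alertname", "cluster"}
	alerts := []Labels{
		{"alertname": "instance_down", "cluster": "eu", "instance": "a:8080"},
		{"alertname": "instance_down", "cluster": "eu", "instance": "b:8081"},
		{"alertname": "instance_down", "cluster": "us", "instance": "c:8082"},
	}
	groups := groupAlerts(alerts, groupBy)

	// Map iteration order is random, so sort the keys for stable output.
	keys := make([]string, 0, len(groups))
	for k := range groups {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	for _, k := range keys {
		fmt.Printf("%s: %d alert(s) in one notification\n", k, len(groups[k]))
	}
}
```

The two eu alerts share a group and would be batched into one notification. The us alert forms its own group.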

Multiple approaches to suppression: grouping (per route) vs inhibition (global) vs silencing (via UI / API)

Alerts

ALERT <alert name>
  IF <PromQL vector expression>
  FOR <duration>
  LABELS { ... }
  ANNOTATIONS { ... }

AlertManager supports clients other than Prometheus and is notified when alerts transition state.

Alerts are a shared construct between Prometheus and AlertManager.

[Figure: alert states. In Prometheus, an alert moves from inactive to pending to firing. In AlertManager, an alert is inactive or firing, and state transitions produce notifications.]

Notification Integrations

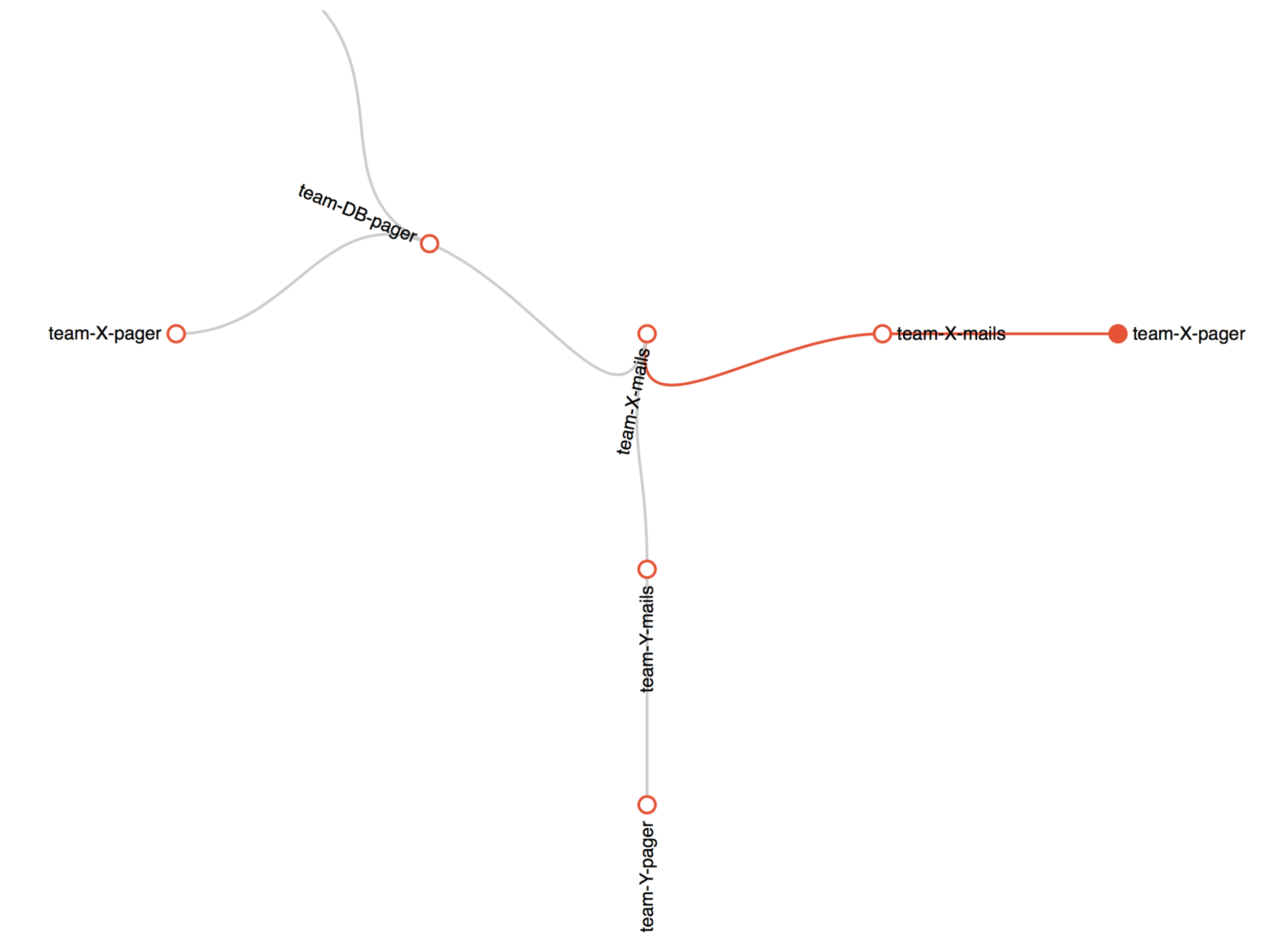

Notifying to Multiple Destinations

Use a receiver that goes to both destinations:

route:
  receiver: email_webhook

receivers:
- name: email_webhook
  email_configs:
  - to: 'lee@example.io'
  webhook_configs:
  - url: <webhook url here>

Or use continue to advance to the next receiver:

route:
  receiver: ops-team-all # default
  routes:
  - match:
      severity: page
    receiver: ops-team-b
    continue: true
  - match:
      severity: critical
    receiver: ops-team-a

receivers:
- name: ops-team-all
  email_configs:
  - to: ops-team-all@example.io
- name: ops-team-a
  email_configs:
  - to: ops-team-a@example.io
- name: ops-team-b
  email_configs:
  - to: ops-team-b@example.io
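Routing-tree traversal, including continue, can be sketched as a depth-first walk. Children are tried in order, and the first match wins unless it sets continue: true. A node with no matching child handles the alert itself. The Route type and Receivers method below are hypothetical simplifications: they support exact-match labels only, and parent settings such as group_by are not inherited.

```go
package main

import "fmt"

// Labels is a simplified label set.
type Labels map[string]string

// Route is a hypothetical, simplified routing-tree node.
type Route struct {
	Match    Labels // exact-match labels; empty matches everything
	Receiver string
	Continue bool
	Routes   []*Route
}

func (r *Route) matches(l Labels) bool {
	for k, v := range r.Match {
		if l[k] != v {
			return false
		}
	}
	return true
}

// Receivers walks the tree depth-first and returns every receiver the alert
// is delivered to (nil if this node does not match).
func (r *Route) Receivers(l Labels) []string {
	if !r.matches(l) {
		return nil
	}
	var out []string
	for _, child := range r.Routes {
		got := child.Receivers(l)
		if len(got) == 0 {
			continue // child did not match; try the next sibling
		}
		out = append(out, got...)
		if !child.Continue {
			break // first match wins unless continue: true
		}
	}
	if len(out) == 0 {
		out = []string{r.Receiver} // no child matched: this node handles it
	}
	return out
}

func main() {
	// Tree modeled on the ops-team example.
	tree := &Route{
		Receiver: "ops-team-all",
		Routes: []*Route{
			{Match: Labels{"severity": "page"}, Receiver: "ops-team-b", Continue: true},
			{Match: Labels{"severity": "critical"}, Receiver: "ops-team-a"},
		},
	}
	fmt.Println(tree.Receivers(Labels{"severity": "page"}))
	fmt.Println(tree.Receivers(Labels{"severity": "critical"}))
	fmt.Println(tree.Receivers(Labels{"severity": "info"}))
}
```

In the ops-team tree, an alert cannot carry both severity: page and severity: critical. So continue only changes the outcome when sibling routes match on different labels, for example a team route followed by a severity route.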

Non-HA AlertManager Architecture

[Figure: Non-HA AlertManager architecture. Components shown:
- UI and API
- Alert Provider (store)
- Silence Provider
- Dispatcher (subscribes to alerts)
- Router
- notification pipeline for batched alerts: Inhibitor, Silencer, de-duplication, Retry
- Notify Provider
- Maintenance Script]

The Dispatcher sorts incoming alerts into aggregation groups and assigns the correct notifiers to each.

De-duplication checks for previously sent notifications.
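The notification pipeline can be pictured as a chain of stages, each of which filters a batch of alerts. The Go sketch below assumes a hypothetical Stage interface with three stages:

- a mute stage, standing in for the Inhibitor and Silencer
- a de-duplication stage that consults a notification log
- a notify stage that records successful sends

AlertManager's real stages, retry logic and repeat intervals are not reproduced.

```go
package main

import "fmt"

// Alert is a hypothetical, simplified alert keyed by a fingerprint-like string.
type Alert struct {
	Key   string
	Muted bool // stands in for "matched by an inhibition rule or silence"
}

// Stage is a hypothetical pipeline step: it filters a batch of alerts.
type Stage interface {
	Exec(alerts []Alert) []Alert
}

// NotificationLog records which alerts have already been notified.
type NotificationLog map[string]bool

// MuteStage drops muted alerts (the Inhibitor and Silencer role).
type MuteStage struct{}

func (MuteStage) Exec(alerts []Alert) []Alert {
	var out []Alert
	for _, a := range alerts {
		if !a.Muted {
			out = append(out, a)
		}
	}
	return out
}

// DedupStage drops alerts that the log says were already sent.
type DedupStage struct{ Log NotificationLog }

func (d DedupStage) Exec(alerts []Alert) []Alert {
	var out []Alert
	for _, a := range alerts {
		if !d.Log[a.Key] {
			out = append(out, a)
		}
	}
	return out
}

// NotifyStage "sends" each alert and records it in the log afterwards.
type NotifyStage struct {
	Log  NotificationLog
	Sent *[]string
}

func (n NotifyStage) Exec(alerts []Alert) []Alert {
	for _, a := range alerts {
		*n.Sent = append(*n.Sent, a.Key) // a real notifier would call an integration here
		n.Log[a.Key] = true
	}
	return alerts
}

// Run passes a batch through the stages in order, stopping once it is empty.
func Run(stages []Stage, alerts []Alert) []Alert {
	for _, s := range stages {
		alerts = s.Exec(alerts)
		if len(alerts) == 0 {
			break
		}
	}
	return alerts
}

func main() {
	nlog := NotificationLog{}
	var sent []string
	pipeline := []Stage{MuteStage{}, DedupStage{Log: nlog}, NotifyStage{Log: nlog, Sent: &sent}}
	batch := []Alert{{Key: "a"}, {Key: "b", Muted: true}}
	Run(pipeline, batch)
	Run(pipeline, batch) // second flush: "a" is caught by de-duplication
	fmt.Println(sent)
}
```

Flushing the same batch twice sends alert a only once. The muted alert b never reaches the notifier, and the second flush stops at de-duplication.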

High Availability

Being introduced in 0.5

Uses gossip protocols, built atop Weave Mesh.

With HA, you no longer have to monitor the monitor.

Designed for an alert to be sent to all instances in the cluster.

All Prometheus instances send alerts to all Alertmanager instances.

Guarantees that notifications are sent at least once.

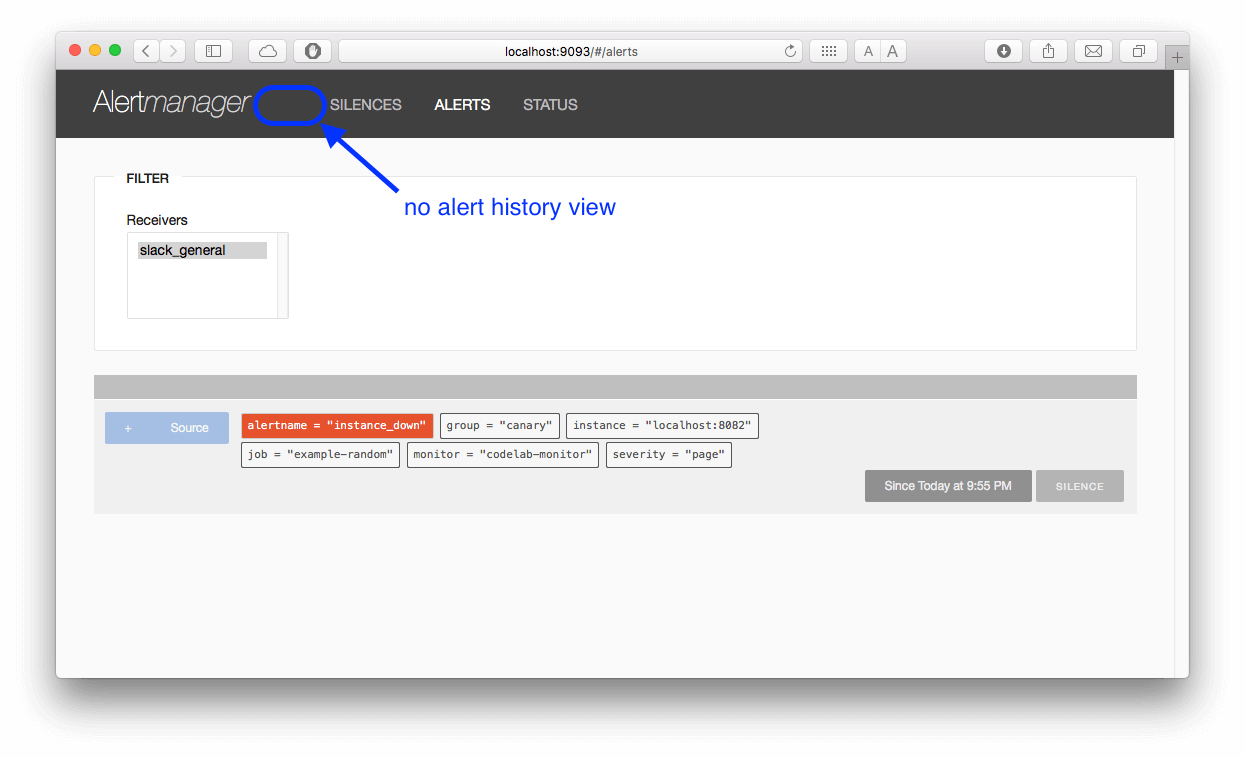

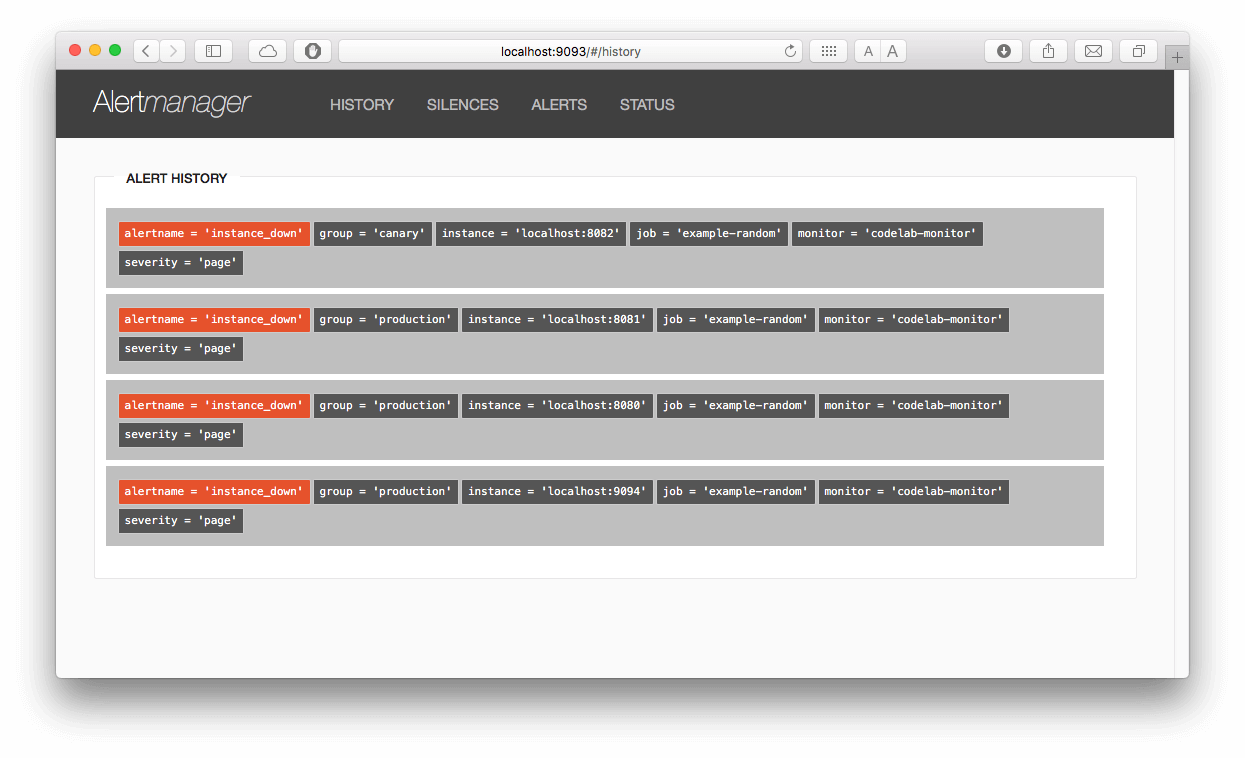

AlertManager UI

Story:

As an Operator, I would like to see not only a list of firing alerts, but also a list of all transpired alerts, so that I have additional context on the thresholding behavior of a given alert definition.

Prologue:

Alert troubleshooting improves when operators can see what is firing, what has recently fired and what is normal, and can also go back in time to see what fired an hour ago. Understanding the order in which alerts fired assists in root cause analysis and helps identify problem areas.

Limitations:

- AlertManager database (SQLite) is not intended to provide long-term storage.

Acceptance Criteria:

- Once fired, whether actively firing or not, alerts will be displayed on the History page.

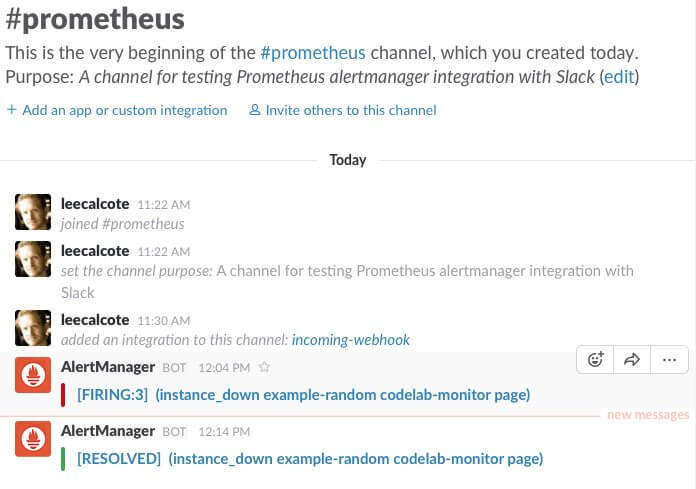

- Optionally, notifications of fired alerts will be sent to a Slack channel.

Stretch:

Include pagination

Add a date range picker

Add a host filter

Environment

test setup

Random Sample Targets

$ git clone https://github.com/prometheus/client_golang.git
$ cd client_golang/examples/random
$ go get -d
$ go build

Fetch and compile the client library code example.

Start example targets in separate terminals.

$ ./random -listen-address=:8080
$ ./random -listen-address=:8081
$ ./random -listen-address=:8082

With the random sample targets built and running, point Prometheus at your soon-to-be AlertManager.

Prometheus and Alert Rules Setup

Follow the getting started instructions to download, configure and run Prometheus.

$ ./prometheus -config.file=prometheus.yml -alertmanager.url=http://localhost:9093

ALERT instance_down
  IF up == 0
  FOR 5s
  LABELS {severity="page"}
  ANNOTATIONS {
    DESCRIPTION="{{$labels.instance}} of job {{$labels.job}} has been down for more than 5 seconds.",
    SUMMARY="Instance {{$labels.instance}} down"
  }

/alert.rules

A simple alert rule that will fire when any given target is unreachable for longer than 5 seconds.
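The {{$labels.instance}} placeholders in the rule above use Go template syntax. The sketch below shows one way such an annotation could be expanded with Go's text/template. It assumes $labels and $value are bound by prepending variable definitions to the template. The expand helper and the example-random job name are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// expand renders an annotation template with $labels bound to the alert's
// labels and $value to the sample value. Binding them by prepending variable
// definitions is an assumption made for this sketch.
func expand(tmpl string, labels map[string]string, value float64) (string, error) {
	defs := "{{$labels := .Labels}}{{$value := .Value}}"
	t, err := template.New("annotation").Parse(defs + tmpl)
	if err != nil {
		return "", err
	}
	data := struct {
		Labels map[string]string
		Value  float64
	}{labels, value}
	var b strings.Builder
	if err := t.Execute(&b, data); err != nil {
		return "", err
	}
	return b.String(), nil
}

func main() {
	labels := map[string]string{"instance": "localhost:8080", "job": "example-random"}
	for _, tmpl := range []string{
		"{{$labels.instance}} of job {{$labels.job}} has been down for more than 5 seconds.",
		"Instance {{$labels.instance}} down",
	} {
		out, err := expand(tmpl, labels, 0)
		if err != nil {
			fmt.Println("template error:", err)
			continue
		}
		fmt.Println(out)
	}
}
```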


...
# Load and evaluate rules in this file every 'evaluation_interval' seconds.
rule_files:
  - "alert.rules"
...

/prometheus.yml

Environment

development setup

Grab Repos

$ git clone https://github.com/prometheus/alertmanager.git

Given that our user story includes making front-end changes to AlertManager, ensure that you install go-bindata, a small utility that generates Go code from any file (AlertManager uses it to embed its static web files).

Clone AlertManager repo

Get, build and copy go-bindata into any directory on your PATH

$ go get -u github.com/jteeuwen/go-bindata/...
$ cd $GOPATH/src/github.com/jteeuwen/go-bindata/go-bindata
$ go build

Notification Integration

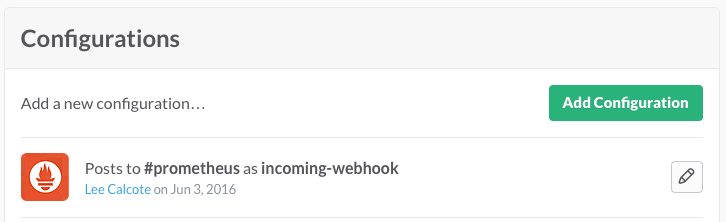

Create an alert notification receiver.

route:
  group_by: [cluster]
  # If an alert isn't caught by a route, send it to Slack.
  receiver: slack_general
  routes:
  # Send severity=page alerts to Slack.
  - match:
      severity: page
    receiver: slack_general

receivers:
- name: slack_general
  slack_configs:
  - api_url: '<your-web-url-here>'
    channel: '#<your-channel-name-here>'
    send_resolved: true

Of the supported AlertManager receivers, let’s opt for integrating Slack.

The visual editor can assist in building routing trees.

Build, Run, Test

Verify you have a functional development environment by building and running the project:

$ make assets # invokes go-bindata to inject static web files
$ go build # compiles go code
$ ./alertmanager -config.file=slack.yml # runs alertmanager with the specified configuration

Reload Prometheus or AlertManager configs:

$ curl -X POST http://localhost:9090/-/reload
$ kill -HUP `pgrep alertmanager`

Validate Prometheus config and alert rules:

$ ./promtool check-config <config file>
$ ./promtool check-rules <rules file>

If you chose to set up a Slack channel, you should now see new alerts firing as your random targets go up and down.

Test

Changelog

/api.go (Go)
/provider/provider.go, /provider/sqlite/sqlite.go, /provider/boltmem/boltmem.go (Go & SQL)
/ui/app/js/app.js (Angular)
/ui/app/partials/history.html (HTML)

/api.go

All UI functionality should be addressable via API.

Let’s register a new /history API endpoint:

r.Get("/history", ihf("history", api.listAllAlerts))

func (api *API) listAllAlerts(w http.ResponseWriter, r *http.Request) {
	// Iterate over every stored alert, not only the currently pending ones.
	alerts := api.alerts.GetAll()
	defer alerts.Close()

	var (
		err error
		res []*types.Alert
	)
	// Drain the iterator, stopping at the first provider error.
	for a := range alerts.Next() {
		if err = alerts.Err(); err != nil {
			break
		}
		res = append(res, a)
	}
	if err != nil {
		respondError(w, apiError{
			typ: errorInternal,
			err: err,
		}, nil)
		return
	}
	respond(w, types.Alerts(res...))
}

With /api/v1/history now registered as an API endpoint, we need a function to handle requests made to it.

The api.listAllAlerts function will handle inbound HTTP requests made to the new endpoint.

- Add a new method returning an AlertIterator (e.g. GetAll() AlertIterator) to /provider/provider.go

- Add a new AlertProvider and SQL query to /provider/sqlite/sqlite.go

- Add a new AlertIterator and AlertProvider to /provider/boltmem/boltmem.go

With the API endpoint in place, let's turn our attention to the backend: collecting the right recordset from our data provider.

/provider

/ui/app/js/app.js

NavCtrl for the History menu item:

angular.module('am.controllers').controller('NavCtrl',
  function($scope, $location) {
    $scope.items = [{
      name: 'History',
      url: 'history'
    },
    ...

as well as a new History service:

angular.module('am.services').factory('History',
  function($resource) {
    return $resource('', {}, {
      'query': {
        method: 'GET',
        url: 'api/v1/history'
      }
    });
  }
);

and a new History controller:

angular.module('am.controllers').controller('HistoryCtrl',
  function($scope, History) {
    $scope.refresh = function () {
      History.query({},
        function(data) {
          $scope.groups = data.data;
          console.log($scope.groups);
        }, function(data) {
          console.log(data.data);
        }
      );
    };
    $scope.refresh();
  }
);

Insert a new History directive:

angular.module('am.directives').directive('history',
  function() {
    return {
      restrict: 'E',
      scope: {
        alert: '=',
        group: '='
      },
      templateUrl: 'app/partials/history.html'
    };
  }
);

Finally, we'll need a page in which to view the transpired alerts, so create a new file, history.html, under /ui/app/partials.

history.html simply formats the recordset retrieved from our data provider for display as a table.

/ui/app/partials/history.html

Summary

This example enhancement provides a view of transient history: only the period of time that the SQLite database retains.

AlertManager is not currently intended to provide long-term storage.

Contributing is easier than you may think.

Reference

Resources

IRC: #prometheus on irc.freenode.net

Mailing lists:

- prometheus-users – discussing Prometheus usage and community support

- prometheus-developers – contributing to Prometheus development

Prometheus repositories to file bugs and feature requests

Thank you. Questions?

yes, we're hiring