Introduction to Container Networking

Lee Calcote

October 2017

CS378: Virtualization

The University of Texas at Austin


Lee Calcote

clouds, containers, functions, applications and their management

Show of Hands

Container Networking

...it's complicated.

Preset Expectations

Reliability & Performance

- same demands and measurements
- better than or on par with existing virtual networking

Experience & Management

- developer-friendly and application-driven
- simple to use and deploy for developers and operators
- intent-based networking

Container Networking Specifications

Very interesting, but no need to actually know these

Container Network Model (CNM)

...is a specification proposed by Docker and implemented in libnetwork.

Container Network Interface (CNI)

...is a specification proposed by CoreOS and adopted by projects such as rkt, Kurma, Kubernetes, Cloud Foundry, and Apache Mesos.

Plugins created by projects such as Weave, Project Calico, Plumgrid, Midokura and Contiv.

Container Networking Specifications

Container Network Model

[Diagram: the Docker runtime's Container Network Model (libnetwork), with local drivers (None, Bridge, Overlay, MACvlan) and remote drivers (third-party).]

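Container Network Model

object model

[Diagram: the Docker Engine manages three containers, each with a network sandbox. Sandboxes hold endpoints, endpoints attach to networks, and one container has endpoints on two networks. Each network is backed by a network driver, and an IPAM driver assigns addresses.]

Microsegmentation: a container attached to two networks does not relay traffic between them.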

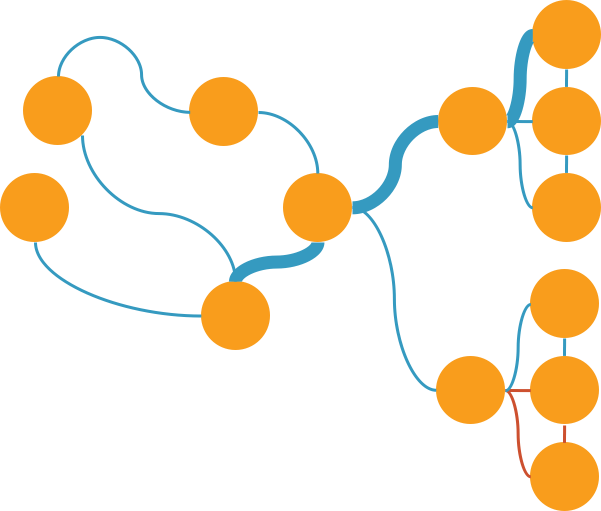
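Here is a minimal Python sketch of the object model. It assumes a simplified rule: two containers can talk only when their sandboxes have endpoints on a common network. The classes `Network`, `Endpoint`, `Sandbox` and the helper `can_reach` are hypothetical illustrations, not libnetwork's API.

```python
from dataclasses import dataclass, field


@dataclass
class Network:
    name: str
    driver: str  # e.g. "bridge" or "overlay"


@dataclass
class Endpoint:
    network: Network


@dataclass
class Sandbox:
    container: str
    endpoints: list = field(default_factory=list)

    def join(self, network: Network) -> Endpoint:
        """Create an endpoint on `network` inside this container's sandbox."""
        endpoint = Endpoint(network)
        self.endpoints.append(endpoint)
        return endpoint

    def network_names(self) -> set:
        return {ep.network.name for ep in self.endpoints}


def can_reach(a: Sandbox, b: Sandbox) -> bool:
    """Containers reach each other only through a network they both joined.

    A sandbox with endpoints on several networks does not relay traffic
    between them, so reachability never passes through a third container.
    """
    return bool(a.network_names() & b.network_names())


# Three containers; "app" is attached to both networks.
frontend = Network("frontend", "bridge")
backend = Network("backend", "bridge")
web, app, db = Sandbox("web"), Sandbox("app"), Sandbox("db")
web.join(frontend)
app.join(frontend)
app.join(backend)
db.join(backend)
```

Here `app` joins both networks, yet `can_reach(web, db)` is false. Attaching a container to two networks does not turn it into a router between them.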

Container Network Interface

topology

[Diagram: the container runtime calls the Container Network Interface (CNI), which invokes plugins: Loopback, Bridge, PTP, MACvlan, IPvlan, and third-party.]

Container Network Interface

interface creation flow

- Container runtime needs to:
  - allocate a network namespace to the container and assign a container ID
  - pass along a number of parameters (CNI config) to the network driver.
- Network driver needs to:
  - attach the container to a network
  - then report the assigned IP address back to the container runtime (via JSON schema)
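The flow above can be sketched in Python using the convention from the CNI specification. The runtime sets `CNI_COMMAND`, `CNI_CONTAINERID`, `CNI_NETNS`, `CNI_IFNAME` and `CNI_PATH`, writes the network configuration to the plugin's stdin, and reads a JSON result from stdout. Several details are illustrative assumptions:

- the helper names;
- the `/opt/cni/bin` default;
- a result carrying an `ips` list, as CNI 0.3.x/0.4.x results do.

Actually running `invoke_plugin` requires root, a real plugin binary, and an existing network namespace.

```python
import json
import os
import subprocess


def cni_env(command, container_id, netns_path, ifname="eth0",
            cni_path="/opt/cni/bin"):
    """Build the environment a runtime passes to a CNI plugin."""
    env = dict(os.environ)
    env.update({
        "CNI_COMMAND": command,          # ADD, DEL, CHECK or VERSION
        "CNI_CONTAINERID": container_id,
        "CNI_NETNS": netns_path,         # path to the container's netns
        "CNI_IFNAME": ifname,            # interface to create inside it
        "CNI_PATH": cni_path,            # plugin directory (one dir assumed)
    })
    return env


def invoke_plugin(config, command, container_id, netns_path):
    """Run the plugin named by config["type"]; needs root and real binaries."""
    env = cni_env(command, container_id, netns_path)
    plugin = os.path.join(env["CNI_PATH"], config["type"])
    proc = subprocess.run([plugin], input=json.dumps(config).encode(),
                          env=env, capture_output=True, check=True)
    # ADD returns a JSON result on stdout; DEL normally prints nothing.
    return json.loads(proc.stdout) if proc.stdout else {}


def assigned_addresses(result):
    """Pull the assigned addresses out of a CNI result's "ips" list."""
    return [ip["address"] for ip in result.get("ips", [])]
```

For example, `assigned_addresses` turns a result containing `{"address": "10.22.0.5/16"}` into `["10.22.0.5/16"]`. That is the address the runtime records for the container.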

CNI network configuration (JSON)

```json
{
  "name": "mynet",
  "type": "bridge",
  "bridge": "cni0",
  "isGateway": true,
  "ipMasq": true,
  "ipam": {
    "type": "host-local",
    "subnet": "10.22.0.0/16",
    "routes": [
      { "dst": "0.0.0.0/0" }
    ]
  }
}
```

CNI and CNM

Similar in that both...

- ...are driver-based (pluggable), and therefore
  - democratize the selection of which type of container networking to use
  - ...allow multiple network drivers to be active and used concurrently
  - provide a many-to-one mapping of networks to a network driver
- ...allow containers to join one or more networks.
- ...allow the container runtime to launch the network in its own namespace
  - delegate the application/business logic of connecting the container to the network to the network driver.

CNI and CNM

Different in that...

- CNI supports any container runtime
  - CNM only supports the Docker runtime
- CNI is simpler and has adoption beyond its creator
- CNM acts as a broker for conflict resolution
  - CNI is still considering its approach to arbitration

Container Networking Solutions

Network Capabilities and Services

IPAM, multicast, broadcast, IPv6, load-balancing, service discovery, policy, quality of service, advanced filtering and performance...

...are all additional considerations to account for when selecting container networking that fits your needs.

IPv6 and IPAM

IPv6

- lack of support for IPv6 in the top public clouds
  - reinforces the need for other networking types (overlays and fan networking)
  - some tier 2 public cloud providers offer support for IPv6

IPAM

- most container runtime engines default to host-local IPAM for assigning addresses to containers as they are connected to networks
  - host-local IPAM involves defining a fixed block of IP addresses to select from
- DHCP is universally supported across the container networking projects
- CNM and CNI both have IPAM built in, plus plugin frameworks for integration with IPAM systems
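To illustrate host-local IPAM, here is a toy allocator that hands out addresses from one fixed block. It reuses the `10.22.0.0/16` subnet from the CNI example. The class `HostLocalAllocator` is hypothetical, and reserving the first usable address for the gateway is an assumption. The real CNI `host-local` plugin also persists its reservations on disk, so treat this as a sketch of the idea rather than that plugin's behavior.

```python
import ipaddress


class HostLocalAllocator:
    """Toy host-local-style IPAM over one fixed subnet (illustrative only)."""

    def __init__(self, subnet, gateway=None):
        self.network = ipaddress.ip_network(subnet)
        # Assumption: the first usable address is the gateway unless given.
        self.gateway = (ipaddress.ip_address(gateway) if gateway
                        else next(self.network.hosts()))
        self.leases = {}  # container ID -> assigned address

    def allocate(self, container_id):
        """Return the container's lease, or the lowest free address."""
        if container_id in self.leases:
            return self.leases[container_id]
        used = set(self.leases.values())
        for ip in self.network.hosts():
            if ip != self.gateway and ip not in used:
                self.leases[container_id] = ip
                return ip
        raise RuntimeError(f"subnet {self.network} is exhausted")

    def release(self, container_id):
        """Free the container's address so it can be handed out again."""
        self.leases.pop(container_id, None)
```

Allocation here is a linear scan for the lowest free address, which keeps the sketch short. A released address is handed out again on the next request.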

iptables

Container AA

Container A

kube-proxy

kube-proxy

Node A

Node B

Client

Pod A

Service A

iptables

NodePort

NodePort+LoadBalancer

Container BB

Container B

Pod B

Service B

iptables

Container AA

Container A

Ingress

Controller

kube-proxy

kube-proxy

Node A

Node B

Client

Pod A

Ingress B

Service A

iptables

Inbound

Outbound

Ingress Controller

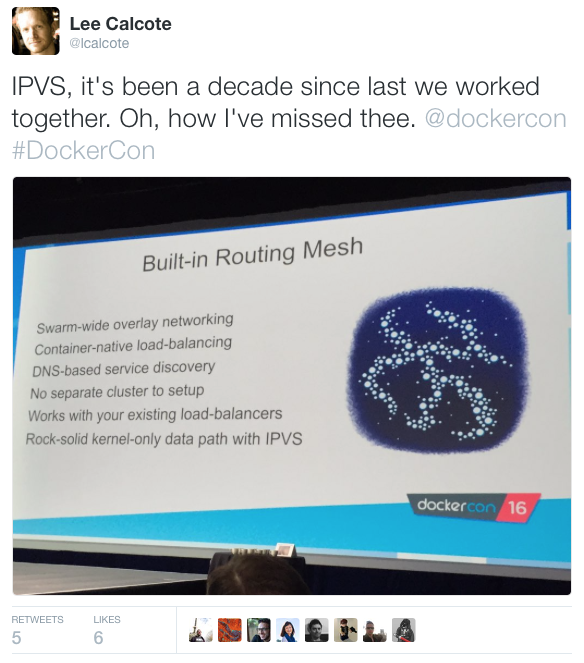

Kubernetes traffic flow with

Docker 1.12 (Load-balancing)

- Swarm and multi-host networking are simpatico
  - user-defined overlay networks that are micro-segmentable
  - uses HashiCorp's Serf gossip protocol for quick convergence of neighbor tables between hosts
  - facilitates container name resolution via an embedded DNS server (previously via /etc/hosts)
- Load-balancing based on IPVS
  - expose a Service's port externally; L4 load-balancer
- Mesh routing
  - send a request to any one of the nodes and it will be routed automatically and internally load balanced

A massive leap forward, with a small step back: a cluster-wide namespace for port publishing (a published port is claimed on every node in the swarm).

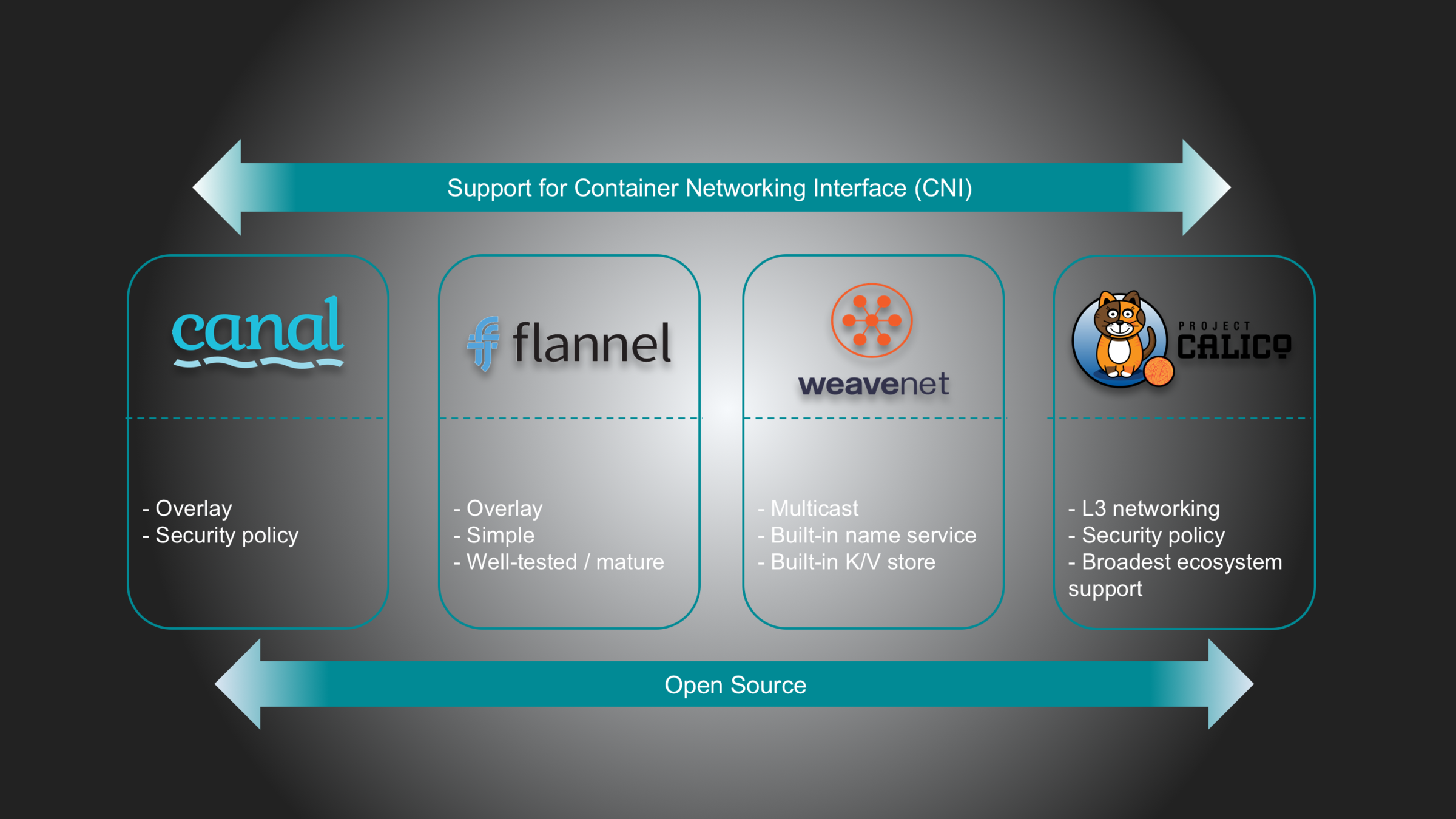

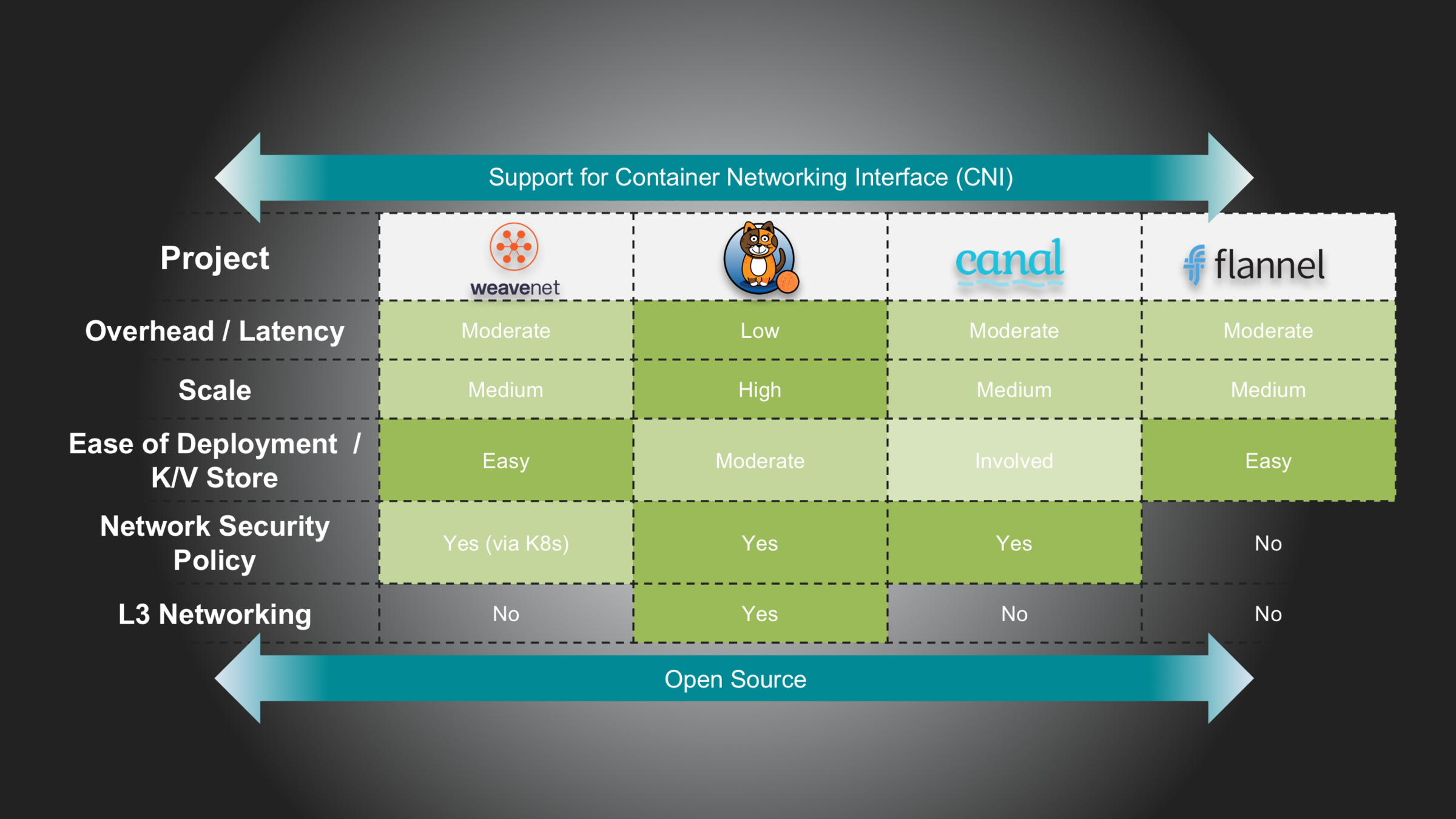
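Below is a toy Python model of the routing mesh. IPVS supports several schedulers; round-robin selection across tasks is assumed here for simplicity. `RoutingMesh`, the node names, and the 10.255.0.x task addresses are hypothetical.

```python
import itertools


class RoutingMesh:
    """Toy model of swarm-style mesh routing (hypothetical, not Docker's code).

    Every node accepts traffic on a published port and forwards it to one of
    the service's tasks, wherever that task runs, in round-robin order.
    """

    def __init__(self, nodes):
        self.nodes = set(nodes)
        self._backends = {}  # published port -> round-robin iterator of tasks

    def publish(self, port, tasks):
        # Ports are published in a cluster-wide namespace: one service per port.
        if port in self._backends:
            raise ValueError(f"port {port} is already published in the cluster")
        self._backends[port] = itertools.cycle(list(tasks))

    def handle(self, node, port):
        """Return the (node, address) task that serves a request at `node`."""
        if node not in self.nodes:
            raise KeyError(f"unknown node {node}")
        return next(self._backends[port])
```

Here is a usage example. With tasks on `node1` and `node3`, a request sent to `node2` is still served. A second service cannot publish the same port, which is the cluster-wide port namespace trade-off.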

Docker Overlay - VXLAN overlay

Calico - L3 w/optional encapsulation

Flannel - VXLAN or UDP Overlay

Weave Net - VXLAN or UDP Overlay

Canal - VXLAN or UDP Overlay

Romana - L3

Cilium - L3 w/optional encapsulation

Trireme - L3 w/TLS

Contiv - L2, L3 (BGP), VXLAN Overlay

VMware NSX - VXLAN UDP Overlay

SolarWinds - All

Container Networking Solutions

See additional research.

- Nearly all are open source
- Use a variety of technologies
  - VXLAN
  - UDP overlay
  - L2
  - L3, with or w/o TLS
  - BGP
  - IP routed

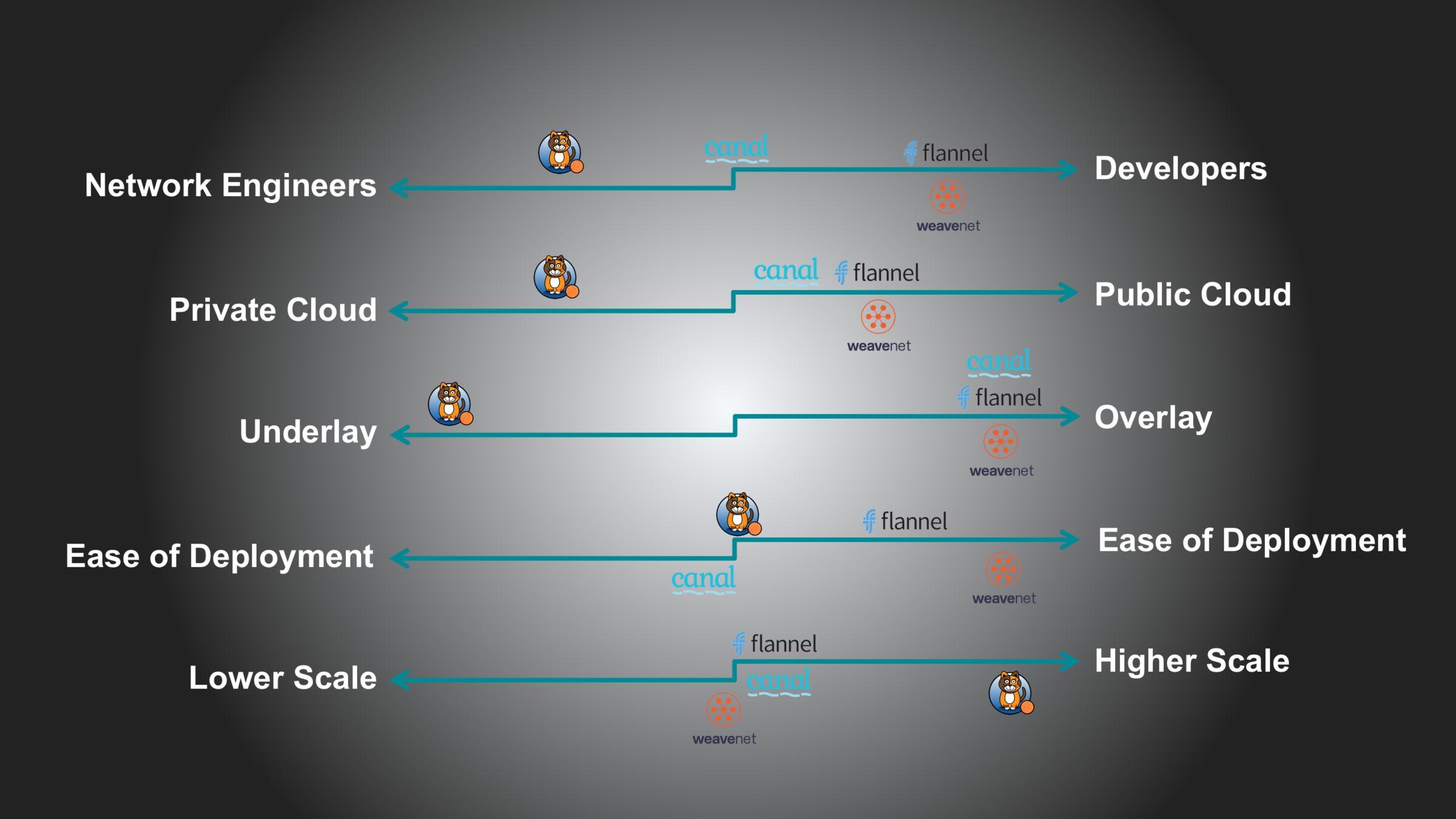

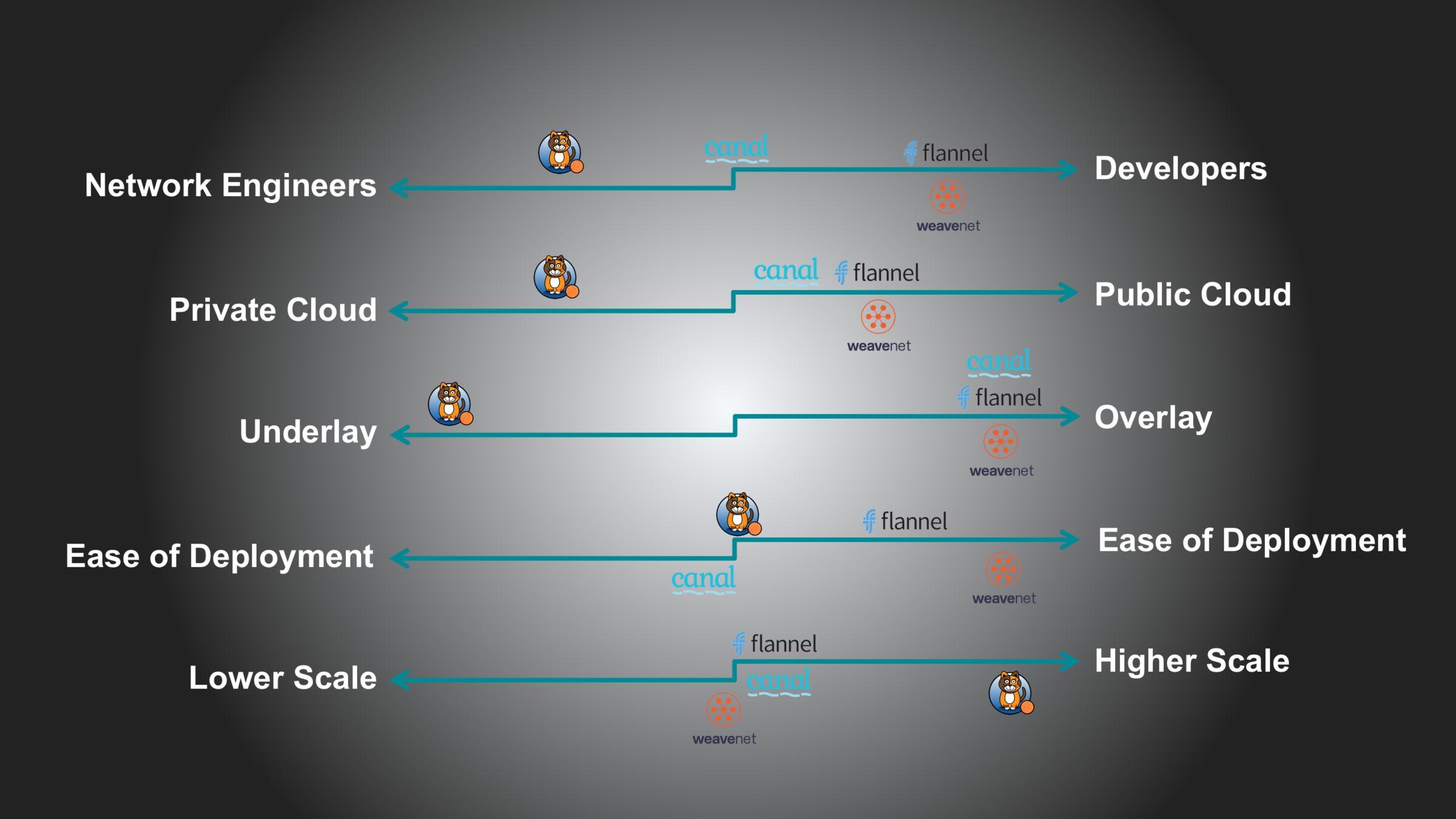

Project strengths


Spectra of strength and focus

Critical Capabilities

Types of Container Networking

- None

- Links and Ambassadors

- Container-mapped

- Bridge

- Overlay

- Underlay

- Host

- MACvlan

- IPvlan

- Direct Routing

- Point-to-Point

- Fan Networking

network namespace ≈ VRF

veth ≈ virtual Ethernet devices, which always come in pairs

None

The container receives a network stack but lacks an external network interface.

It does, however, receive a loopback interface.

Good ol' lo.

[Diagram: a container in its own network namespace with only a loopback interface (lo), next to the host network namespace with interfaces eth0 and eth1.]

Bridge

- default networking for Docker

- uses a host-internal network

- leverages iptables for network address translation (NAT) and port-mapping

Ah, yes, docker0

[Diagram: three containers attached to the docker0 bridge on the host, which has physical interfaces eth0 and eth1.]
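The bridge driver's port-mapping and NAT behavior can be sketched as the nat-table rules involved. These Python helpers, `dnat_rule` and `masquerade_rule`, are hypothetical. They produce rules in `iptables -S` syntax roughly like those Docker installs for `docker run -p 8080:80` on the default `docker0` bridge (172.17.0.0/16 by default). The exact chains and options vary between Docker versions, so treat the output as illustrative.

```python
import ipaddress


def dnat_rule(host_port, container_ip, container_port,
              bridge="docker0", proto="tcp"):
    """nat-table rule for a port mapping such as `-p 8080:80`."""
    ip = ipaddress.ip_address(container_ip)  # rejects malformed addresses
    # Traffic arriving from outside the bridge on host_port is rewritten
    # (DNAT) to the container's address and port.
    return (f"-A DOCKER ! -i {bridge} -p {proto} -m {proto} "
            f"--dport {host_port} -j DNAT --to-destination {ip}:{container_port}")


def masquerade_rule(bridge_subnet, bridge="docker0"):
    """nat-table rule that hides containers behind the host's address."""
    net = ipaddress.ip_network(bridge_subnet)
    # Outbound traffic from the bridge subnet leaving via another interface
    # is source-NATed (MASQUERADE) to the host's IP.
    return f"-A POSTROUTING -s {net} ! -o {bridge} -j MASQUERADE"
```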

Links

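- facilitate single-host connectivity
- "discovery" via /etc/hosts or env vars

Ambassadors

- facilitate multi-host connectivity
- use a TCP port forwarder (socat)

[Diagram: on the Web Host, a PHP container is linked to a MySQL ambassador; on the DB Host, a MySQL container is linked to a PHP ambassador; the two ambassadors forward traffic between the hosts.]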
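An ambassador is essentially a TCP relay. As a stand-in for socat (e.g. `socat TCP-LISTEN:3306,fork TCP:<db-host>:3306`, where `<db-host>` is a placeholder), here is a minimal asyncio forwarder. `start_ambassador` is a hypothetical helper. In the pattern above, one such relay would run in a container on each host.

```python
import asyncio


async def _pipe(reader, writer):
    """Copy bytes one way until EOF, then half-close the other side."""
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()
        if writer.can_write_eof():
            writer.write_eof()
    except OSError:
        pass  # peer reset the connection; the handler closes both ends


async def start_ambassador(listen_host, listen_port, target_host, target_port):
    """Accept local connections and relay each one to target_host:target_port."""

    async def handle(client_reader, client_writer):
        try:
            up_reader, up_writer = await asyncio.open_connection(
                target_host, target_port)
        except OSError:
            client_writer.close()  # target unreachable: drop the client
            return
        try:
            await asyncio.gather(_pipe(client_reader, up_writer),
                                 _pipe(up_reader, client_writer))
        finally:
            up_writer.close()
            client_writer.close()

    return await asyncio.start_server(handle, listen_host, listen_port)
```

On the Web Host, the PHP container would connect to the MySQL ambassador as if it were the database, and the ambassador relays the bytes to the DB Host.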

Container-Mapped

one container reuses (maps to) the networking namespace of another container.

This may only be specified when running a Docker container (it cannot be defined in a Dockerfile):

--net=container:some_container_name_or_id

Host

the container shares its network namespace with the host

suffers from port conflicts

secure?

better performance

easy to understand and troubleshoot

default Mesos networking mode
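Host-mode containers share the host's network namespace, so they also share one port space. This single-process Python sketch uses the hypothetical helper `second_bind_conflicts` to show the underlying kernel behavior: a second socket cannot bind an address and port that a listener already holds.

```python
import errno
import socket


def second_bind_conflicts(host="127.0.0.1"):
    """Bind a listener to a free port, then try to bind a second socket to the
    same address and port in the same network namespace.

    Returns (port, conflicted): conflicted is True when the second bind fails
    with EADDRINUSE, as it would for two host-mode containers.
    """
    first = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    second = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        first.bind((host, 0))          # let the kernel choose a free port
        first.listen()
        port = first.getsockname()[1]
        try:
            second.bind((host, port))
        except OSError as exc:
            return port, exc.errno == errno.EADDRINUSE
        return port, False
    finally:
        first.close()
        second.close()
```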

Overlay

the shadow IT network

uses networking tunnels to deliver communication across hosts

- Most useful in hybrid cloud scenarios
  - or when shadow IT is needed
- Many tunneling technologies exist
  - VXLAN being the most commonly used
- Requires a distributed key-value store
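Overlays such as VXLAN wrap each container frame in an outer UDP packet. The Python sketch below builds and parses the 8-byte VXLAN header defined in RFC 7348. The header holds an 8-bit flags field with the I bit set, reserved bits, and a 24-bit VXLAN Network Identifier (VNI). The helper names are illustrative, and the outer Ethernet/IP/UDP headers are left to the kernel.

```python
import struct

VXLAN_PORT = 4789        # IANA-assigned UDP destination port (RFC 7348)
VXLAN_FLAG_VNI = 0x08    # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header that precedes the inner Ethernet frame."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + 24 reserved bits.
    # Word 2: VNI (24 bits) + 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI, checking that the I flag is set."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI:
        raise ValueError("VNI flag not set")
    return vni_word >> 8
```

On an IPv4 underlay, VXLAN adds 50 bytes of outer headers (14 Ethernet + 20 IPv4 + 8 UDP + 8 VXLAN). That overhead is why overlay interfaces commonly run with a 1450-byte MTU.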

K/V Store for Overlays

BYOKV?

- Docker
  - 1.11 requires a K/V store
  - built-in as of 1.12
    - uses the Raft implementation from etcd
    - uses go-memdb and Serf from HashiCorp
- WeaveNet - limited to a single network; does not require a K/V store
- WeaveMesh - does not require a separate K/V store
- Flannel - requires K/V store
- Plumgrid - requires K/V store; built-in and not pluggable
- Midokura - requires K/V store; built-in and not pluggable
- Calico - requires K/V store
- NSX - requires K/V store
- Romana - requires K/V store
- Cilium - requires K/V store

Underlays

expose host interface(s) directly to container(s) (e.g. the physical network interface at eth0)

- MACvlan

- IPvlan

- Direct Routing

- Fan Networking

not necessarily public cloud friendly

MACvlan

- allows creation of multiple virtual network interfaces behind the host's physical interface
- each virtual interface has unique MAC and IP addresses assigned
  - with a restriction: the assigned IP address needs to be in the same broadcast domain as the physical interface (not trunking)
- eliminates the need for the Linux bridge, NAT and port-mapping
  - allowing you to connect directly to the physical interface

Check switch port security (e.g. switchport port-security mac-address sticky)

[Diagram: host eth0 connects to the l2/l3 physical underlay over an 802.1q trunk. Sub-interfaces eth0.20 and eth0.30 carry MACvlan 20 (VLAN 20, 10.0.20.0/24) and MACvlan 30 (VLAN 30, 10.0.30.0/24); one container's eth0 is 10.0.20.2 and the other's is 10.0.30.2.]

Promiscuous mode required
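The VLAN setup in the diagram can be reproduced with iproute2. This hypothetical Python helper, `macvlan_on_vlan`, only generates the commands. Running them needs root and an existing network namespace, here called `c1`. The helper also enforces the slide's broadcast-domain restriction. `mode bridge` is an assumed MACvlan mode.

```python
import ipaddress


def macvlan_on_vlan(parent, vlan_id, subnet, container_ip, netns):
    """Return iproute2 commands placing a container on an 802.1q VLAN via
    a MACvlan interface (illustrative names; run as root)."""
    net = ipaddress.ip_network(subnet)
    ip = ipaddress.ip_address(container_ip)
    # MACvlan restriction: the address must be in the VLAN's broadcast domain.
    if ip not in net:
        raise ValueError(f"{ip} is not in {net}")
    sub = f"{parent}.{vlan_id}"          # 802.1q sub-interface, e.g. eth0.20
    mv = f"macvlan{vlan_id}"
    return [
        f"ip link add link {parent} name {sub} type vlan id {vlan_id}",
        f"ip link set {sub} up",
        f"ip link add link {sub} name {mv} type macvlan mode bridge",
        f"ip link set {mv} netns {netns}",
        f"ip netns exec {netns} ip addr add {ip}/{net.prefixlen} dev {mv}",
        f"ip netns exec {netns} ip link set {mv} up",
    ]
```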

IPvlan

- allows creation of multiple virtual network interfaces behind a host's physical interface
- each virtual interface has a unique IP address assigned
  - the same MAC address is used for all containers
- L2 mode: containers must be on the same network as the host (similar to MACvlan)
- L3 mode: containers must be on a different network than the host
  - network advertisement and redistribution into the network still need to be done
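The IPvlan addressing rule can be captured in a small check. `ipvlan_placement_ok` is a hypothetical helper built on Python's `ipaddress` module, and the example addresses are illustrative.

```python
import ipaddress


def ipvlan_placement_ok(mode, host_if, container_if):
    """Check the IPvlan addressing rule for a container interface.

    mode: "l2" (container must share the host's network) or
          "l3" (container must be on a different network).
    host_if / container_if: addresses with prefix, e.g. "192.168.1.10/24".
    """
    host_net = ipaddress.ip_interface(host_if).network
    container = ipaddress.ip_interface(container_if)
    if mode == "l2":
        return container.ip in host_net
    if mode == "l3":
        # The container subnet must not overlap the host's; it still has to
        # be advertised/redistributed so the rest of the network can reach it.
        return not container.network.overlaps(host_net)
    raise ValueError("mode must be 'l2' or 'l3'")
```

On Linux, the mode is fixed when the interface is created, for example `ip link add link eth0 name ipvl0 type ipvlan mode l3`.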

MACvlan and IPvlan

- While multiple modes of networking are supported on a given host, MACvlan and IPvlan can't be used on the same physical interface concurrently.
- For ARP and broadcast traffic, the L2 modes of these underlay drivers operate just as a server connected to a switch does: by flooding and learning using 802.1D packets.
- IPvlan L3 mode: no multicast or broadcast traffic is allowed in.
- In short, if you're used to running trunks down to hosts, L2 mode is for you.
- If scale is a primary concern, L3 has the potential for massive scale.

Direct Routing

- Resonates with network engineers
- Leverages existing network infrastructure
- Uses routing protocols for connectivity; easier to interoperate with the existing data center across VMs and bare metal servers
- Better scaling
- More granular control over filtering and isolating network traffic
- Easier traffic engineering for quality of service
- Easier to diagnose network issues
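Direct routing forwards container traffic natively instead of tunneling it. This minimal Python sketch of the forwarding decision assumes each host advertises, for example via BGP, the container subnet it owns. `next_hop` performs a longest-prefix match over a hypothetical routing table.

```python
import ipaddress

# Hypothetical table a host might learn via a routing protocol: each remote
# host advertises the container subnet it owns.
ROUTES = {
    "10.244.1.0/24": "192.168.0.11",   # containers on host 1
    "10.244.2.0/24": "192.168.0.12",   # containers on host 2
    "0.0.0.0/0": "192.168.0.1",        # default gateway
}


def next_hop(dst, routes=ROUTES):
    """Longest-prefix match: the most specific route containing dst wins."""
    addr = ipaddress.ip_address(dst)
    best = None
    for cidr, hop in routes.items():
        net = ipaddress.ip_network(cidr)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, hop)
    if best is None:
        raise LookupError(f"no route to {dst}")
    return best[1]
```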

Point-to-Point

default rkt networking mode

- Creates a virtual Ethernet (veth) pair
  - placing one end on the host and the other in the container pod
- Leverages iptables to provide port-forwarding for inbound traffic to the pod and NAT (IPMASQ) for outbound traffic
- Internal communication between other containers in the pod happens over the loopback interface

[Diagram: a veth pair connects the pod (two containers sharing eth0 and loopback 0) to the host, where iptables handles forwarding and NAT onto the l2/l3 physical network.]

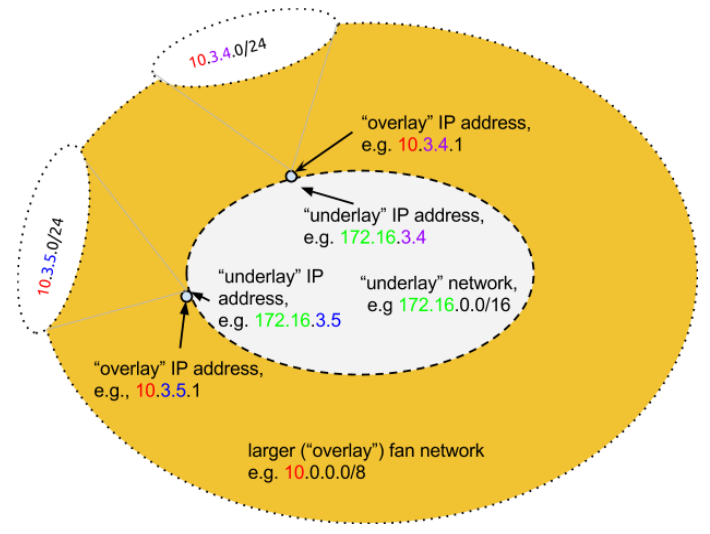
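A point-to-point link can be assembled by hand with iproute2. This hypothetical Python helper only generates the commands. It assumes a /30 link where the host end doubles as the container's default gateway. The CNI `ptp` plugin also handles IPAM, routing, and masquerading itself, so this is a simplified sketch. The names `pod1`, `veth-host`, and `veth-pod` are illustrative.

```python
import ipaddress


def ptp_commands(netns, host_if, container_if, host_cidr, container_cidr):
    """iproute2 commands for a point-to-point veth link into a namespace."""
    gateway = ipaddress.ip_interface(host_cidr).ip  # host end acts as gateway
    return [
        f"ip netns add {netns}",
        # veth devices are always created as a pair of linked ends.
        f"ip link add {host_if} type veth peer name {container_if}",
        f"ip link set {container_if} netns {netns}",  # move one end inside
        f"ip addr add {host_cidr} dev {host_if}",
        f"ip link set {host_if} up",
        f"ip netns exec {netns} ip addr add {container_cidr} dev {container_if}",
        f"ip netns exec {netns} ip link set {container_if} up",
        # Containers sharing the pod namespace talk to each other over lo.
        f"ip netns exec {netns} ip link set lo up",
        f"ip netns exec {netns} ip route add default via {gateway} dev {container_if}",
    ]
```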

Fan Networking

a way of gaining access to many more IP addresses, expanding from one assigned IP address to roughly 250 more

- "address expansion": multiplies the number of available IP addresses on the host, providing an extra 253 usable addresses for each host IP
  - Fan addresses are assigned as subnets on a virtual bridge on the host
  - IP addresses are mathematically mapped between networks
- uses IP-in-IP tunneling; high performance
- particularly useful when running containers in a public cloud
  - where a single IP address is assigned to a host and spinning up additional networks is prohibitive, or running another load-balancer instance is costly

diagram courtesy Dustin Kirkland
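The mathematical mapping can be sketched in Python. It assumes the scheme Ubuntu's Fan uses in its examples: the host portion of a host's underlay address is appended to an overlay prefix. The `250.0.0.0/8` overlay default and the helper name `fan_subnet` are assumptions for illustration.

```python
import ipaddress


def fan_subnet(host_ip, underlay, overlay="250.0.0.0/8"):
    """Map a host's underlay IP to the overlay subnet it owns under the Fan.

    The host part of the underlay address is appended to the overlay prefix:
    a /16 underlay with a /8 overlay gives each host a /24.
    """
    under = ipaddress.IPv4Network(underlay)
    over = ipaddress.IPv4Network(overlay)
    host = ipaddress.IPv4Address(host_ip)
    if host not in under:
        raise ValueError(f"{host} is not in underlay {under}")
    host_bits = 32 - under.prefixlen             # 16 for a /16 underlay
    prefix = over.prefixlen + host_bits          # 8 + 16 = 24
    if prefix > 30:
        raise ValueError("overlay prefix too long for this underlay")
    offset = int(host) - int(under.network_address)   # host part of the IP
    base = int(over.network_address) + (offset << (32 - prefix))
    return ipaddress.IPv4Network((base, prefix))
```

For example, host 172.16.3.4 on a 172.16.0.0/16 underlay maps to 250.3.4.0/24. A /24 holds 256 addresses. Setting aside the network, broadcast, and bridge addresses leaves 253 for containers, assuming the bridge takes one address from the subnet.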

Project

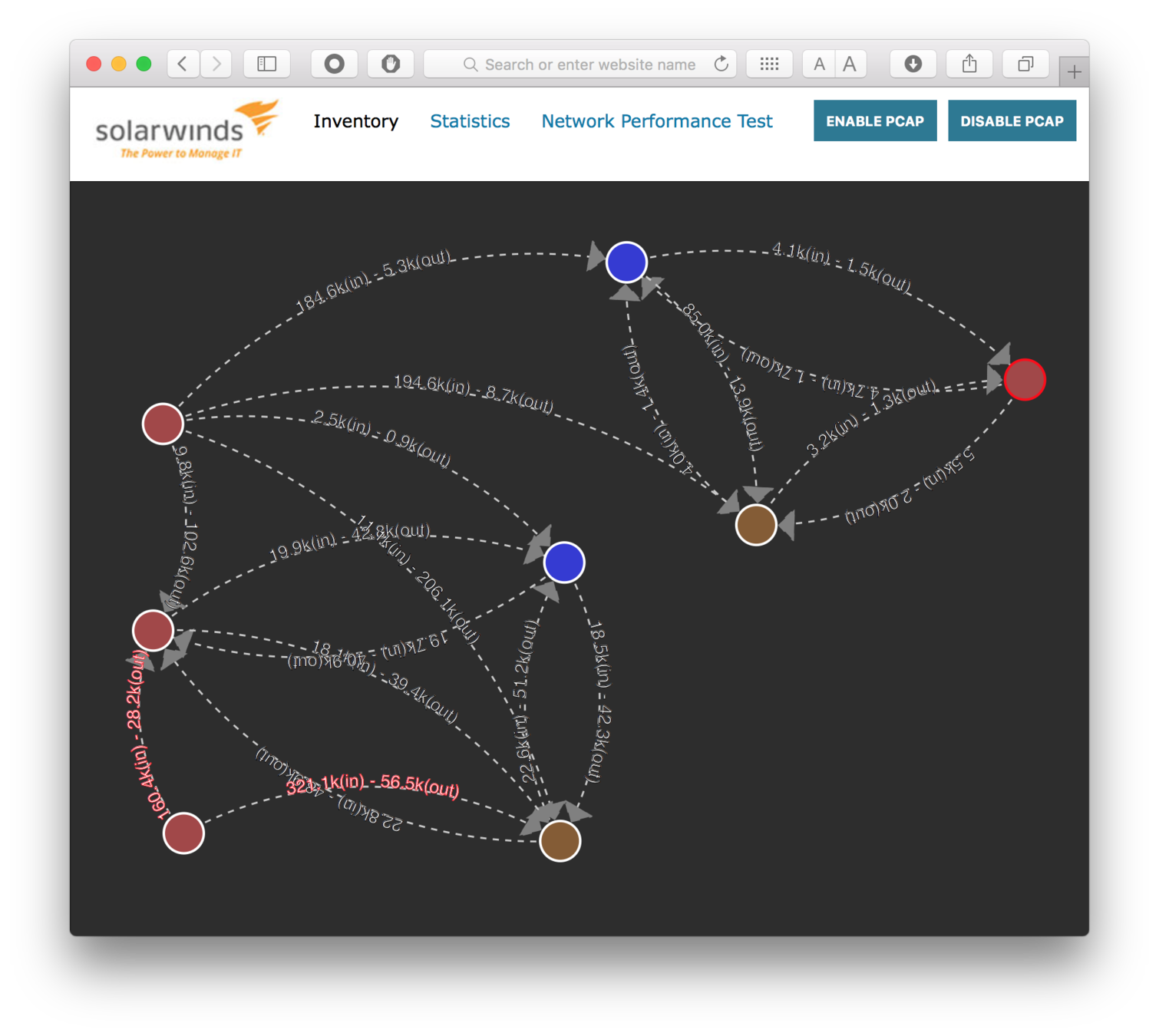

Container Network Performance Tool

Learn more -

Preview

Project

Container Network Performance Tool

- Cluster visibility: see container network flows (current bandwidth and direction) across Kubernetes and Docker Swarm nodes.
- Bandwidth test: test throughput (performance) of each type of container network (compare network drivers).
- Network observations: insight into network flows and recommendations on scheduling.

Preview

Learn more -

Thank you. Questions?