Characterizing and Contrasting Kuhn-tey-ner Awr-kuh-streyt-ors

All Things Open, October 2016

Lee Calcote


clouds, containers, infrastructure, applications and their management

Show of Hands

[kuhn-tey-ner]

[awr-kuh-streyt-or]

Definition:

- Fleet
- Nomad
- Swarm
- Kubernetes
- Mesos+Marathon
- CaaS

(Stay tuned for updates to presentation and book)

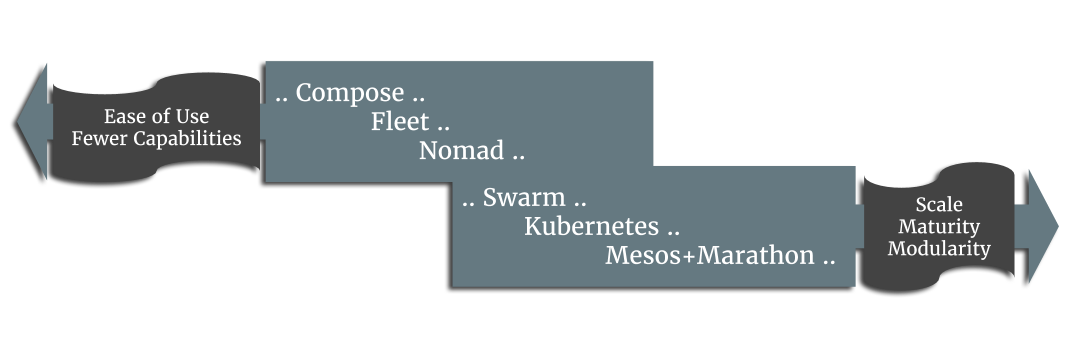

One size does not fit all.

A strict apples-to-apples comparison is inappropriate and not the objective, hence characterizing and contrasting.

Let's not go here today.

Container orchestrators may be intermixed.

Categorically Speaking

- Genesis & Purpose
- Support & Momentum
- Host & Service Discovery
- Scheduling
- Modularity & Extensibility
- Updates & Maintenance
- Health Monitoring
- Networking & Load-Balancing
- High Availability & Scale

Hypervisor Manager Elements

- Compute
- Network
- Storage

Container Orchestrator Elements

- Host (Node)

- Container

- Task

- Service

- Volume

- Applications


Core Capabilities

- Cluster Management
- Host Discovery
- Host Health Monitoring
- Scheduling
- Orchestrator Updates and Host Maintenance
- Service Discovery
- Networking and Load-Balancing

Additional Key Capabilities

- Application Health Monitoring
- Application Deployments
- Application Performance Monitoring

Nomad

Genesis & Purpose

- designed for both long-lived services and short-lived batch-processing workloads.

- cluster manager with declarative job specifications.

- ensures constraints are satisfied and resource utilization is optimized by efficient task packing.

- supports all major operating systems and virtualized, containerized or standalone workloads.

- written in Go.

Support & Momentum

- Project began June 2015; 113 contributors over 16 months
- Current release v0.4
- v0.5 expected in a week or so
- Nomad Enterprise offering aimed for Q1-Q2 next year
- Supported and governed by HashiCorp
- HashiConf US '15 had ~300 attendees
- HashiConf EU '16 had ~320 attendees
- HashiConf US '16 had ~500 attendees

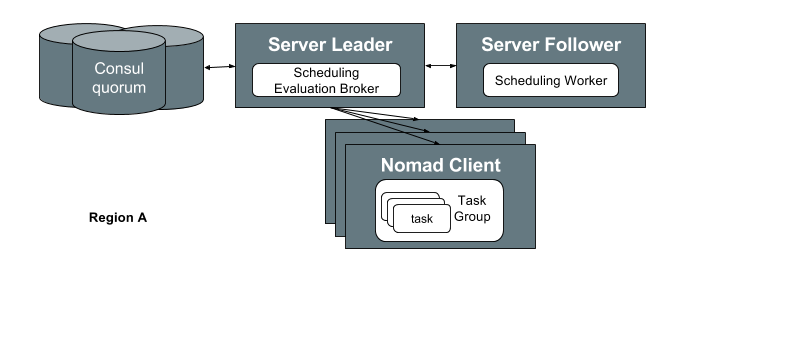

Nomad Architecture

Nomad is a single binary, both for clients and servers, and requires no external services for coordination or storage.

Host & Service Discovery

Host Discovery

- Gossip protocol: Serf is used (Docker multi-host networking and SwarmKit use Serf, too).
- Servers advertise the full set of Nomad servers to clients via heartbeats every 30 seconds.
- Creating federated clusters is simple.

Service Discovery

- Nomad integrates with Consul to provide service discovery and monitoring.

Scheduling

- Two distinct phases: feasibility checking and ranking.
- Optimistically concurrent, enabling all servers to participate in scheduling decisions, which increases total throughput and reduces latency.
- Three scheduler types are used when creating jobs: service, batch and system.
- `nomad plan` provides a point-in-time view of what Nomad will do.
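The two scheduling phases can be illustrated with a minimal Python sketch. It assumes hypothetical `Node` and `Task` shapes and a simple bin-packing score (average post-placement CPU and memory utilization); Nomad's real data structures and ranking function are more involved, so treat this only as an illustration of feasibility-then-ranking.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:  # hypothetical node shape, not Nomad's internal structs
    name: str
    cpu_total: int  # MHz
    mem_total: int  # MB
    cpu_free: int
    mem_free: int
    attrs: dict = field(default_factory=dict)


@dataclass
class Task:
    cpu: int
    mem: int
    constraints: dict = field(default_factory=dict)  # attribute -> required value


def feasible(node: Node, task: Task) -> bool:
    """Phase 1: filter out nodes that violate a constraint or lack resources."""
    if any(node.attrs.get(k) != v for k, v in task.constraints.items()):
        return False
    return node.cpu_free >= task.cpu and node.mem_free >= task.mem


def bin_pack_score(node: Node, task: Task) -> float:
    """Phase 2: higher score for nodes that would be fuller after placement."""
    cpu_used = (node.cpu_total - node.cpu_free + task.cpu) / node.cpu_total
    mem_used = (node.mem_total - node.mem_free + task.mem) / node.mem_total
    return (cpu_used + mem_used) / 2


def place(nodes: list[Node], task: Task) -> Node | None:
    candidates = [n for n in nodes if feasible(n, task)]
    if not candidates:
        return None
    return max(candidates, key=lambda n: bin_pack_score(n, task))


nodes = [
    Node("a", 4000, 4096, cpu_free=1000, mem_free=1024, attrs={"kernel": "linux"}),
    Node("b", 4000, 4096, cpu_free=3500, mem_free=3000, attrs={"kernel": "linux"}),
    Node("c", 4000, 4096, cpu_free=4000, mem_free=4096, attrs={"kernel": "windows"}),
]
task = Task(cpu=500, mem=512, constraints={"kernel": "linux"})
print(place(nodes, task).name)
```

Node `c` is filtered out by the constraint, and node `a` outranks `b` because packing onto the busier node keeps `b` free for larger tasks; a spread-style ranking would simply invert the comparison.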

Modularity & Extensibility

Task drivers

- Used by Nomad clients to execute a task and provide resource isolation.
- Extensible task drivers are important for the flexibility to support a broad set of workloads.
- Pluggable task drivers are not currently supported; you have to implement the task driver interface and compile the Nomad binary.

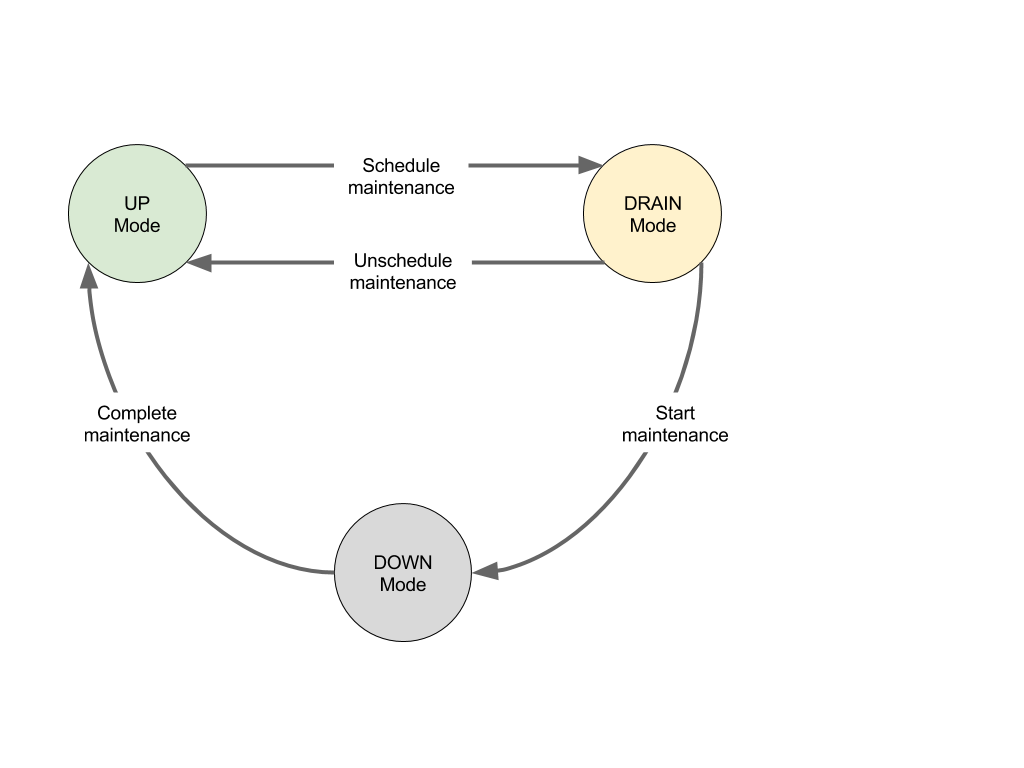

Updates & Maintenance

Nodes

- Drain allocations on a running node.
- Integrates with tools like Packer, Consul, and Terraform to support building artifacts, service discovery, monitoring and capacity management.

Applications

- Log rotation (stderr and stdout)
- No log forwarding support, yet
- Rolling updates (via the `update` block in the job specification).

Health Monitoring

Nodes

- Node health monitoring is done via heartbeats, so Nomad can detect failed nodes and migrate the allocations to other healthy clients.
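A minimal Python sketch of heartbeat-based failure detection and allocation migration, assuming a fixed TTL and caller-supplied timestamps. `HeartbeatTracker` and its methods are hypothetical; Nomad's actual heartbeat TTLs, grace periods and rescheduling logic are not modeled.

```python
from __future__ import annotations


class HeartbeatTracker:
    """Marks a node failed when no heartbeat arrives within `ttl` seconds (hypothetical helper)."""

    def __init__(self, ttl: float) -> None:
        self.ttl = ttl
        self.last_seen: dict[str, float] = {}
        self.allocations: dict[str, list[str]] = {}

    def heartbeat(self, node: str, now: float) -> None:
        self.last_seen[node] = now
        self.allocations.setdefault(node, [])

    def failed_nodes(self, now: float) -> list[str]:
        return sorted(n for n, t in self.last_seen.items() if now - t > self.ttl)

    def migrate_from_failed(self, now: float) -> None:
        """Move allocations from failed nodes to the healthy node with the fewest allocations."""
        failed = self.failed_nodes(now)
        healthy = [n for n in sorted(self.last_seen) if n not in failed]
        if failed and not healthy:
            raise RuntimeError("no healthy clients to migrate to")
        for node in failed:
            for alloc in self.allocations[node]:
                target = min(healthy, key=lambda n: len(self.allocations[n]))
                self.allocations[target].append(alloc)
            self.allocations[node] = []


tracker = HeartbeatTracker(ttl=10.0)
tracker.heartbeat("client-1", now=0.0)
tracker.heartbeat("client-2", now=0.0)
tracker.allocations["client-1"] = ["web-1", "web-2"]
tracker.heartbeat("client-2", now=8.0)  # client-1 goes silent
print(tracker.failed_nodes(now=12.0))
tracker.migrate_from_failed(now=12.0)
print(tracker.allocations)
```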

Applications

- Health checks currently supported: HTTP, TCP and script.
- In the future, Nomad will add support for more Consul checks.
- `nomad alloc-status` reports actual resource utilization.

Networking & Load-Balancing

Networking

- Dynamic ports are allocated in a range from 20000 to 60000.
- Tasks share the node's IP address.
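Because tasks share the node's IP address, each dynamic port must be unique per node. A minimal sketch of picking a free port from that range, assuming a random-probe strategy and a caller-maintained `used` set (both hypothetical; this is not Nomad's allocator):

```python
from __future__ import annotations

import random

DYNAMIC_PORT_MIN, DYNAMIC_PORT_MAX = 20000, 60000  # range stated on the slide


def allocate_dynamic_port(used: set[int], rng: random.Random, attempts: int = 20) -> int:
    """Randomly probe the dynamic range for a port not already reserved on this node."""
    for _ in range(attempts):
        port = rng.randint(DYNAMIC_PORT_MIN, DYNAMIC_PORT_MAX)  # inclusive bounds
        if port not in used:
            used.add(port)
            return port
    raise RuntimeError("could not find a free dynamic port")


node_ports = {20000, 20001}  # ports already taken on the node (example values)
port = allocate_dynamic_port(node_ports, random.Random(7))
print(port)
```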

Load-Balancing

- Consul provides DNS-based load-balancing.

Secrets Management

- Nomad agents provide secure integration with Vault for all tasks and containers they spin up.
- This gives secure access to Vault secrets through a workflow that minimizes the risk of secret exposure during bootstrapping.

High Availability & Scale

- Distributed and highly available, using both leader election and state replication to provide availability in the face of failures.
- Shared-state optimistic scheduler; the only open source implementation.
- 1,000,000 containers across 5,000 hosts, scheduled in 5 minutes.
- Built for managing multiple clusters / cluster federation.

- easier to use

- a single binary for both clients and servers

- supports different non-containerized tasks

- arguably the most advanced scheduler design

- upfront consideration of federation / hybrid cloud

- broad OS support

- Outside of the scheduler, comparatively less sophisticated
- Young project
- Less relative momentum
- Less relative adoption
- Less extensible / pluggable

Docker Swarm

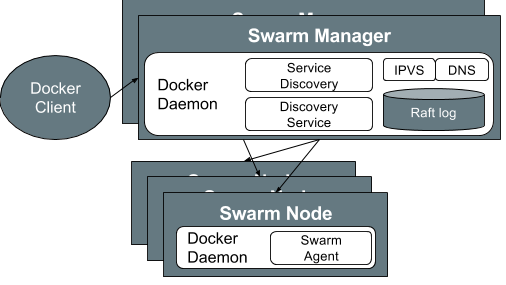

Docker Swarm 1.12, aka SwarmKit or Swarm mode

Genesis & Purpose

- Swarm is simple and easy to set up.
- Responsible for the clustering and scheduling aspects of orchestration.
- Originally an imperative system, now declarative.
- Swarm’s architecture is not as complex as those of Kubernetes and Mesos.
- Written in Go, Swarm is lightweight, modular and extensible.

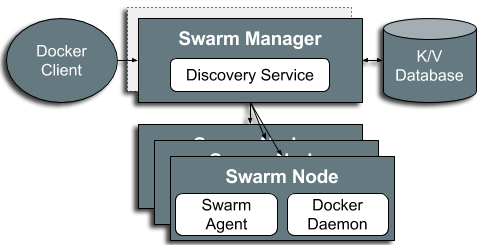

Docker Swarm 1.11 (Standalone)

Docker Swarm Mode 1.12 (Swarmkit)

Support & Momentum

- Standalone contributions: ~3,000 commits, 12 core maintainers (140 contributors)
- SwarmKit contributions: ~2,000 commits, 12 core maintainers (40 contributors)
- ~250 Docker meetups worldwide
- Standalone announced as production-ready ~12 months ago (Nov 2015)
- SwarmKit announced as production-ready ~3 months ago (July 2016)

Host & Service Discovery

Host Discovery

- Used by the Manager in the formation of clusters to discover Nodes (hosts).
- Like Nomad, uses HashiCorp's go-memdb for storing cluster state.
- Pull model, where the Worker checks in with the Manager.
- Rate control: the rate of Worker check-ins may be controlled at the Manager, which adds jitter.
- Workers don't need to know which Manager is active; Follower Managers redirect Workers to the Leader.
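In a pull model, many Workers checking in on the same period can hit the Manager in lockstep; jitter spreads them out. A minimal sketch, assuming a hypothetical base period and jitter fraction chosen by the Manager (not SwarmKit's actual values or code):

```python
from __future__ import annotations

import random


def next_checkin_delay(base: float, jitter_fraction: float, rng: random.Random) -> float:
    """Base period plus uniform jitter in [0, base * jitter_fraction]."""
    return base + rng.uniform(0.0, base * jitter_fraction)


def first_checkins(workers: list[str], base: float, jitter_fraction: float,
                   seed: int = 0) -> dict[str, float]:
    """Delay before each worker's first check-in, using Manager-chosen parameters."""
    rng = random.Random(seed)
    return {w: next_checkin_delay(base, jitter_fraction, rng) for w in workers}


delays = first_checkins(["worker-1", "worker-2", "worker-3"], base=5.0, jitter_fraction=0.5)
for worker, delay in delays.items():
    print(f"{worker} checks in after {delay:.2f}s")
```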

Service Discovery

- Embedded DNS and round-robin load-balancing
- Services are a new concept

Scheduling

- Swarm’s scheduler is pluggable.
- Swarm scheduling is a combination of strategies and filters/constraints.
- Strategies: Random, Binpack, Spread*, Plugin?
- Container filters (affinity, dependency, port) are defined as environment variables in the specification file.
- Node filters (health, constraint) must be specified when starting the Docker daemon and define which nodes a container may be scheduled on.
- Swarm Mode only supports Spread.
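A minimal sketch contrasting the Spread, Binpack and Random strategies, assuming each node is described only by a running-container count and a capacity (a simplifying assumption; Swarm's strategies also account for CPU and memory reservations). The function names are hypothetical.

```python
from __future__ import annotations

import random


def _eligible(running: dict[str, int], capacity: dict[str, int]) -> list[str]:
    """Nodes that still have room for one more container."""
    return sorted(n for n in running if running[n] < capacity[n])


def spread(running: dict[str, int], capacity: dict[str, int]) -> str:
    """Prefer the node with the fewest containers (ties broken by name)."""
    return min(_eligible(running, capacity), key=lambda n: (running[n], n))


def binpack(running: dict[str, int], capacity: dict[str, int]) -> str:
    """Prefer the most-loaded node that still fits, keeping other nodes empty."""
    return min(_eligible(running, capacity), key=lambda n: (-running[n], n))


def random_strategy(running: dict[str, int], capacity: dict[str, int],
                    rng: random.Random) -> str:
    return rng.choice(_eligible(running, capacity))


running = {"node-a": 3, "node-b": 1, "node-c": 5}
capacity = {"node-a": 10, "node-b": 10, "node-c": 5}
print(spread(running, capacity), binpack(running, capacity))
```

Spread picks the lightly loaded `node-b`, while Binpack piles onto `node-a`; the full `node-c` is ineligible under every strategy.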

Modularity & Extensibility

Ability to remove batteries is a strength for Swarm:

- Pluggable scheduler
- Pluggable network driver
- Pluggable distributed K/V store
- Docker container engine runtime-only
- Pluggable authorization (in Docker engine)*

Updates & Maintenance

Nodes

- Nodes may be Active, Drained or Paused.
- Manager weights are used to drain or pause Managers.
- Manual swarm manager and worker updates

Applications

- Rolling updates are now supported via --update-delay, --update-parallelism and --update-failure-action.
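A minimal simulation of how the three flags interact, assuming a caller-supplied `update_task` callback that reports success (hypothetical; Docker performs the rollout inside the Manager). Tasks are replaced `parallelism` at a time, `delay` is added between batches, and `failure_action` ("pause" or "continue") decides what happens after a failed batch; a parallelism of 0 is treated as "all at once".

```python
from __future__ import annotations

from typing import Callable


def rolling_update(tasks: list[str], update_task: Callable[[str], bool],
                   parallelism: int = 1, delay: float = 0.0,
                   failure_action: str = "pause") -> tuple[list[str], float]:
    """Simulate a batched rollout; return (tasks updated, total simulated delay)."""
    if failure_action not in ("pause", "continue"):
        raise ValueError("failure_action must be 'pause' or 'continue'")
    step = parallelism if parallelism > 0 else max(len(tasks), 1)  # 0 = all at once
    updated: list[str] = []
    waited = 0.0
    for start in range(0, len(tasks), step):
        batch = tasks[start:start + step]
        results = [update_task(t) for t in batch]
        updated.extend(t for t, ok in zip(batch, results) if ok)
        if not all(results) and failure_action == "pause":
            break  # remaining tasks stay on the old version
        if start + step < len(tasks):
            waited += delay  # simulated --update-delay between batches
    return updated, waited


def update_ok(task: str) -> bool:
    return task != "web.4"  # simulate one failing task


tasks = [f"web.{i}" for i in range(1, 6)]
print(rolling_update(tasks, update_ok, parallelism=2, delay=10.0))
print(rolling_update(tasks, update_ok, parallelism=2, delay=10.0, failure_action="continue"))
```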

Health Monitoring

Nodes

- Swarm monitors the availability and resource usage of nodes within the cluster.

Applications

- One health check per container may be run; it checks container health by running a command inside the container.
- --interval=DURATION (default: 30s)
- --timeout=DURATION (default: 30s)
- --retries=N (default: 3)
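A minimal sketch of the state machine those options drive, assuming probe results are already collected (the interval between probes is not modeled): a run fails if the command exits non-zero or exceeds the timeout, and the container turns unhealthy after `retries` consecutive failures. `ProbeResult` and `health_status` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ProbeResult:
    exit_code: int   # exit status of the check command
    duration: float  # seconds the command took


def health_status(results: list[ProbeResult], timeout: float = 30.0, retries: int = 3) -> str:
    """Replay probe results in order and return 'starting', 'healthy' or 'unhealthy'."""
    status = "starting"
    consecutive_failures = 0
    for r in results:
        if r.exit_code == 0 and r.duration <= timeout:
            consecutive_failures = 0
            status = "healthy"
        else:
            consecutive_failures += 1
            if consecutive_failures >= retries:
                status = "unhealthy"
    return status


ok, bad = ProbeResult(0, 0.2), ProbeResult(1, 0.2)
print(health_status([ok, bad, bad]))       # two failures: still healthy
print(health_status([ok, bad, bad, bad]))  # third consecutive failure: unhealthy
```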

Networking & Load-Balancing

- Swarm and Docker’s multi-host networking are simpatico:
  - provides for user-defined overlay networks that are micro-segmentable
  - uses a gossip protocol for quick convergence of the neighbor table
  - facilitates container name resolution via an embedded DNS server (previously via /etc/hosts)
- You may bring your own network driver.
- Load-balancing based on IPVS:
  - expose a Service's port externally
  - L4 load-balancer; cluster-wide port publishing
- Mesh routing: send a request to any one of the nodes and it will be routed automatically and internally load-balanced.

Secrets Management

Not yet; tracking toward 1.13.

High Availability & Scale

- Managers may be deployed in a highly-available configuration.
- Active/Standby: only one active Leader at a time.
- Maintain an odd number of Managers.
- Rescheduling upon node failure
- No rebalancing upon node addition to the cluster
- Does not support multiple failure-isolation regions or federation, although, with caveats, federation is possible.
- Scaling Swarm to 1,000 AWS nodes and 50,000 containers
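The advice to keep an odd number of Managers follows from majority-quorum arithmetic (Swarm mode Managers replicate cluster state with Raft). A short Python sketch:

```python
def quorum(managers: int) -> int:
    """Smallest majority of managers that must agree (Raft-style)."""
    return managers // 2 + 1


def fault_tolerance(managers: int) -> int:
    """How many managers can fail while a majority is still reachable."""
    return (managers - 1) // 2


for n in range(1, 8):
    print(f"{n} managers: quorum {quorum(n)}, tolerates {fault_tolerance(n)} failure(s)")
```

Going from 3 to 4 Managers raises the quorum from 2 to 3 without tolerating any additional failure, so an even count adds cost but no resilience. The same arithmetic applies to the ZooKeeper quorum that Mesos masters rely on.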

- Suitable for orchestrating a combination of infrastructure containers
- Has only recently added capabilities falling into the application bucket
- Swarm is a young project: advanced features are forthcoming, with a natural expectation of caveats in functionality.
- No rebalancing, autoscaling or monitoring, yet
- Only schedules Docker containers, not containers using other specifications.
- Does not schedule VMs or non-containerized processes
- Does not provide support for batch jobs
- Needs a separate load-balancer for overlapping ingress ports
- While dependency and affinity filters are available, Swarm cannot enforce that two containers are scheduled onto the same host or not at all.
- Filters facilitate the sidecar pattern. No “pod” concept.

- Swarm works. Swarm is simple and easy to deploy.
- 1.12 eliminated the need for much, but not all, third-party software.
- Facilitates earlier stages of adoption by organizations viewing containers as faster VMs, now with built-in functionality for applications.
- Swarm is easy to extend; if you already know the Docker APIs, you can customize Swarm.
- Still modular, but has stepped back here.
- Moving very fast; eliminating gaps quickly.

Kubernetes

Genesis & Purpose

- An opinionated framework for building distributed systems, or as its tagline states, "an open source system for automating deployment, scaling, and operations of applications."
- Written in Go, Kubernetes is lightweight, modular and extensible.
- Considered a third-generation container orchestrator, led by Google, Red Hat and others.
- Bakes in load-balancing, scale, volumes, deployments, secret management and cross-cluster federated services, among other features.
- Declarative and opinionated, with many key features included

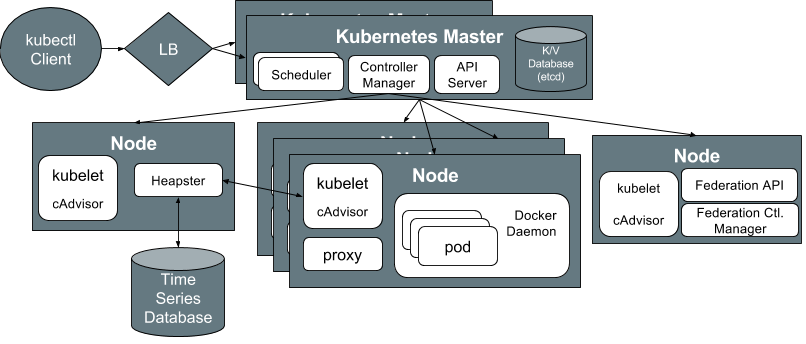

Kubernetes Architecture

Support & Momentum

- Kubernetes is young (about two years old).
- Announced as production-ready 15 months ago (July 2015)
- Project currently has over 1,000 commits per month (~38,000 total), made by about 100 (862 total) Kubernauts (Kubernetes enthusiasts)
- ~5,000 commits made in the 1.3 release (1.4 is the latest)
- Under the governance of the Cloud Native Computing Foundation
- Robust set of documentation and ~90 meetups

Host & Service Discovery

Host Discovery

- By default, the node agent (kubelet) is configured to register itself with the master (API server), automating the joining of new hosts to the cluster.

Service Discovery

Two primary modes of finding a Service:

- DNS: SkyDNS is deployed as a cluster add-on.
- Environment variables: used as a simple way of providing compatibility with Docker links-style networking.
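For a Service named `redis-master`, Kubernetes derives the variable names by uppercasing the name and turning dashes into underscores. A minimal sketch of that Docker links-style naming scheme for a single-port Service; the service name, cluster IP and port are example values, and this reproduces the naming pattern rather than calling any Kubernetes code:

```python
from __future__ import annotations


def service_env(name: str, cluster_ip: str, port: int, protocol: str = "tcp") -> dict[str, str]:
    """Environment variables injected for a single-port Service (Docker links-style names)."""
    prefix = name.upper().replace("-", "_")
    proto = protocol.lower()
    url = f"{proto}://{cluster_ip}:{port}"
    port_key = f"{prefix}_PORT_{port}_{proto.upper()}"
    return {
        f"{prefix}_SERVICE_HOST": cluster_ip,
        f"{prefix}_SERVICE_PORT": str(port),
        f"{prefix}_PORT": url,
        port_key: url,
        f"{port_key}_PROTO": proto,
        f"{port_key}_PORT": str(port),
        f"{port_key}_ADDR": cluster_ip,
    }


for key, value in service_env("redis-master", "10.0.0.11", 6379).items():
    print(f"{key}={value}")
```

Because these variables are injected when a container starts, a pod only sees Services that already existed at that moment; DNS has no such ordering constraint.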

Scheduling

- By default, scheduling is handled by kube-scheduler, which is pluggable.
- Selection criteria used by kube-scheduler to identify the best-fit node are defined by policy:
  - Predicates (node resources and characteristics): PodFitsPorts, PodFitsResources, NoDiskConflict, MatchNodeSelector, HostName, ServiceAffinity, LabelsPresence
  - Priorities (weighted strategies used to identify the “best fit” node): LeastRequestedPriority, BalancedResourceAllocation, ServiceSpreadingPriority, EqualPriority
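A minimal sketch of the predicates-then-priorities pipeline, assuming hypothetical dictionary shapes for nodes and pods (not the Kubernetes API objects). The two predicates are simplified stand-ins for PodFitsResources and MatchNodeSelector, and the single priority follows the LeastRequestedPriority idea of scoring (capacity - requested) * 10 / capacity, averaged over CPU and memory.

```python
from __future__ import annotations


def pod_fits_resources(node: dict, pod: dict) -> bool:
    return (node["cpu_req"] + pod["cpu"] <= node["cpu_cap"]
            and node["mem_req"] + pod["mem"] <= node["mem_cap"])


def match_node_selector(node: dict, pod: dict) -> bool:
    labels = node.get("labels", {})
    return all(labels.get(k) == v for k, v in pod.get("node_selector", {}).items())


def least_requested(node: dict, pod: dict) -> float:
    """0-10 score: more unrequested CPU and memory after placement scores higher."""
    cpu = (node["cpu_cap"] - node["cpu_req"] - pod["cpu"]) * 10 / node["cpu_cap"]
    mem = (node["mem_cap"] - node["mem_req"] - pod["mem"]) * 10 / node["mem_cap"]
    return (cpu + mem) / 2


PREDICATES = [pod_fits_resources, match_node_selector]
PRIORITIES = [(least_requested, 1)]  # (priority function, weight)


def schedule(nodes: list[dict], pod: dict) -> str | None:
    feasible = [n for n in nodes if all(pred(n, pod) for pred in PREDICATES)]
    if not feasible:
        return None
    best = max(feasible, key=lambda n: sum(w * f(n, pod) for f, w in PRIORITIES))
    return best["name"]


nodes = [
    {"name": "node-1", "cpu_cap": 4000, "cpu_req": 3000, "mem_cap": 8192, "mem_req": 2048,
     "labels": {"disk": "ssd"}},
    {"name": "node-2", "cpu_cap": 4000, "cpu_req": 1000, "mem_cap": 8192, "mem_req": 4096,
     "labels": {"disk": "ssd"}},
    {"name": "node-3", "cpu_cap": 8000, "cpu_req": 0, "mem_cap": 16384, "mem_req": 0,
     "labels": {"disk": "hdd"}},
]
pod = {"cpu": 500, "mem": 1024, "node_selector": {"disk": "ssd"}}
print(schedule(nodes, pod))
```

The node selector filters out `node-3`; between the two SSD nodes, `node-2` scores 5.0 against 3.75 for `node-1` because it has more CPU headroom left after placement.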

Modularity & Extensibility

- One of Kubernetes' strengths is its pluggable architecture and its being an extensible platform.
- Choice of:
  - database for service discovery or network driver
  - container runtime: users may choose to run Docker or rkt (Rocket) containers
- Cluster add-ons:
  - optional system components that implement a cluster feature (e.g. DNS, logging, etc.)
  - shipped with the Kubernetes binaries and considered an inherent part of Kubernetes clusters

Updates & Maintenance

Applications

- Deployment objects automate deploying and rolling updates of applications.
- Support for rolling back deployments

Kubernetes Components

- Consistently backwards compatible
- Upgrading the Kubernetes components and hosts is done via shell script.
- Host maintenance: drain the node, which marks it as unschedulable (preventing new pods from being scheduled on it) and vacates existing pods from it.

Health Monitoring

Nodes

- Failures: the Node Controller actively monitors the health of nodes within the cluster.
- Resources: usage monitoring leverages a combination of open source components: cAdvisor, Heapster, InfluxDB, Grafana.

Applications

- Three types of user-defined application health checks (HTTP health checks, container exec, TCP socket), with the kubelet agent as the health-check monitor
- Cluster-level logging:
  - collects logs that persist beyond the lifetime of the pod’s container images, the pod, or even the cluster
  - standard output and standard error of each container can be ingested using a Fluentd agent running on each node

Networking & Load-Balancing

…enter the Pod

- Atomic unit of scheduling
- Flat networking, with each pod receiving an IP address
- No NAT required; port conflicts localized
- Intra-pod communication via localhost

Load-Balancing

- Services provide inherent load-balancing via kube-proxy, which:
  - runs on each node of a Kubernetes cluster
  - reflects services as defined in the Kubernetes API
  - supports simple TCP/UDP forwarding, round-robin, and Docker-links-based service IP:PORT mapping

Secrets Management

- Secrets are used by containers in a pod either mounted as data volumes or exposed as environment variables.
- None of the pod’s containers will start until all the pod’s volumes are mounted.
- Individual secrets are limited to 1MB in size.
- Secrets are created and accessible within a given namespace, not cross-namespace.

High Availability & Scale

- Each master component may be deployed in a highly-available (Active/Standby) configuration.
- Federated clusters / multi-region deployments

Scale

- v1.2 supports 1,000-node clusters
- v1.3 supports 2,000-node clusters
- Horizontal Pod Autoscaling (via Replication Controllers)
- Cluster Autoscaling (if you're running on GCE; AWS support is coming soon)
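Horizontal Pod Autoscaling adjusts the replica count in proportion to observed versus target CPU utilization: desired = ceil(current replicas * current utilization / target utilization). A minimal sketch; the default `min_replicas`/`max_replicas` bounds are arbitrary illustration values, and the autoscaler's tolerance band and scaling rate limits are omitted.

```python
import math


def desired_replicas(current: int, current_util: float, target_util: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Scale replicas in proportion to observed vs. target utilization, then clamp."""
    if target_util <= 0:
        raise ValueError("target utilization must be positive")
    desired = math.ceil(current * current_util / target_util)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_util=90, target_util=50))   # scale out
print(desired_replicas(4, current_util=20, target_util=50))   # scale in
print(desired_replicas(6, current_util=100, target_util=20))  # capped at max_replicas
```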

- Only runs containerized applications
- For those familiar only with Docker, Kubernetes requires understanding new concepts.
- Powerful frameworks with more moving pieces beget complicated cluster deployment and management.
- Lightweight graphical user interface
- Does not provide techniques for resource utilization as sophisticated as those of Mesos
- Kubernetes can schedule Docker or rkt containers
- Inherently opinionated, with functionality built in:
  - relatively easy to change its opinion
  - little to no third-party software needed
  - builds in many application-level concepts and services (PetSets, Jobs, DaemonSets, application packages / charts, etc.)
  - advanced storage/volume management

- Project has the most momentum
- Project is arguably the most extensible
- Thorough project documentation
- Supports multi-tenancy
- Multi-master, cross-cluster federation, robust logging & metrics aggregation

Mesos + Marathon

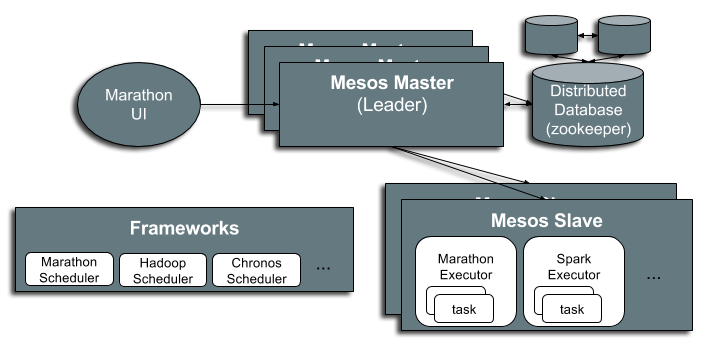

Genesis & Purpose

- Mesos is a distributed systems kernel that stitches together many different machines into a logical computer.
- Mesos has been around the longest (launched in 2009) and is arguably the most stable, with the highest (proven) scale currently.
- Mesos is written in C++, with Java, Python and C++ APIs.
- Marathon as a Framework:
  - Marathon is one of a number of frameworks (Chronos and Aurora are other examples) that may be run on top of Mesos.
  - Frameworks have a scheduler and an executor. Schedulers get resource offers. Executors run tasks.
  - Marathon is written in Scala.

Mesos Architecture

Support & Momentum

- MesosCon 2015 in Seattle had 700 attendees, up from 262 attendees in 2014
- Mesos had 78 contributors
- Marathon had 217 contributors
- Under the governance of the Apache Software Foundation
- Mesos is used by Twitter, Airbnb, eBay, Apple, Cisco, Yodle
- Marathon is used by Verizon and Samsung

Host & Service Discovery

- Mesos-DNS generates an SRV record for each Mesos task, including Marathon application instances.
- Marathon will ensure that all dynamically assigned service ports are unique.
- Mesos-DNS is particularly useful when:
  - apps are launched through multiple frameworks (not just Marathon)
  - you are using an IP-per-container solution like Project Calico
  - you use random host port assignments in Marathon
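Mesos-DNS names SRV records `_task._protocol.framework.domain`, where the domain defaults to `mesos`, so a Marathon app named `search` becomes `_search._tcp.marathon.mesos`. A minimal sketch that renders such records; the agent hostnames, ports, zone-file output format and zero priority/weight are assumptions for illustration, not actual Mesos-DNS output.

```python
from __future__ import annotations


def srv_name(task: str, framework: str, protocol: str = "tcp", domain: str = "mesos") -> str:
    """Mesos-DNS service name: _task._protocol.framework.domain."""
    if protocol not in ("tcp", "udp"):
        raise ValueError("protocol must be 'tcp' or 'udp'")
    return f"_{task}._{protocol}.{framework}.{domain}."


def srv_records(task: str, framework: str, instances: list[tuple[str, int]]) -> list[str]:
    """Zone-file-style SRV lines; priority and weight are fixed at 0 (an assumption)."""
    name = srv_name(task, framework)
    return [f"{name} SRV 0 0 {port} {host}." for host, port in instances]


for record in srv_records("search", "marathon", [("agent-1.example", 31000),
                                                 ("agent-2.example", 31002)]):
    print(record)
```

Because each SRV record carries the port, clients can find instances even when Marathon assigns random host ports.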

Scheduling

- Two-level scheduler:
  - First-level scheduling happens at the Mesos master, based on the allocation policy, which decides which framework gets resources.
  - Second-level scheduling happens at the framework scheduler, which decides what tasks to execute.
- Provides reservations, oversubscription and preemption.
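A minimal sketch of the two levels, assuming hypothetical `Offer` and `Framework` classes and CPU as the only resource. The master (level one) offers each agent's resources to the framework with the least CPU allocated so far, a single-resource simplification of the Dominant Resource Fairness idea behind Mesos's default allocator; the framework's scheduler (level two) decides which pending tasks to launch. Unused resources from an offer are simply dropped here rather than re-offered.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Offer:
    agent: str
    cpus: float


@dataclass
class Framework:
    name: str
    pending: list[float]  # CPU demand of each queued task
    allocated: float = 0.0
    launched: list[tuple[str, float]] = field(default_factory=list)

    def resource_offer(self, offer: Offer) -> None:
        """Level 2: the framework scheduler launches whatever pending tasks fit."""
        used = 0.0
        still_pending = []
        for cpus in self.pending:
            if used + cpus <= offer.cpus:
                used += cpus
                self.launched.append((offer.agent, cpus))
            else:
                still_pending.append(cpus)  # declined for this offer
        self.pending = still_pending
        self.allocated += used


def allocate(offers: list[Offer], frameworks: list[Framework]) -> None:
    """Level 1: the master offers each agent to the framework with the least allocated CPU."""
    for offer in offers:
        hungry = [f for f in frameworks if f.pending]
        if not hungry:
            break
        min(hungry, key=lambda f: (f.allocated, f.name)).resource_offer(offer)


marathon = Framework("marathon", pending=[1.0, 1.0, 3.0])
chronos = Framework("chronos", pending=[2.0])
allocate([Offer("agent-1", 4.0), Offer("agent-2", 4.0), Offer("agent-3", 2.0)],
         [marathon, chronos])
print(chronos.launched, marathon.launched, marathon.pending)
```

The 3-CPU task stays pending because no remaining offer is large enough, illustrating that the master decides who sees resources while each framework decides what to run on them.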

Modularity & Extensibility

Frameworks

- Multiple frameworks available
- May run multiple frameworks

Modules

- Extend the inner workings of Mesos by creating and using shared libraries that are loaded on demand.
- Many types of Modules: Replacement, Isolator, Allocator, Authentication, Hook, Anonymous

Updates & Maintenance

Nodes

- Mesos has maintenance mode.
- Mesos is backwards compatible from v1.0 forward.
- Marathon ?

Applications

- Marathon can be instructed to deploy containers using a blue/green strategy, where old and new versions co-exist for a time.

Health Monitoring

Nodes

- The master tracks a set of statistics and metrics to monitor resource usage.

Applications

- Support for health checks (HTTP and TCP)
- An event stream that can be integrated with load-balancers or used for analyzing metrics

Networking & Load-Balancing

Networking

- An IP per container:
  - containers no longer share the node's IP
  - helps remove port conflicts
  - enables 3rd-party network drivers
- Container Network Interface (CNI) isolator with MesosContainerizer

Load-Balancing

- Marathon offers two TCP/HTTP proxies: a simple shell script and a more complex one called marathon-lb that has more features.
- Pluggable (e.g. Traefik for load-balancing)

Secrets Management

Not yet; only supported by Enterprise DC/OS.

- Secrets shorter than eight characters may not be accepted by Marathon.

- By default, you cannot store a secret larger than 1MB.

High Availability & Scale

- A strength of Mesos’s architecture
- Requires masters to form a quorum using ZooKeeper (point of failure)
- Only one Active (Leader) master at a time in Mesos and Marathon
- Scale is a strong suit for Mesos. TBD for Marathon.
- Great at asynchronous jobs. High availability built in.
- Referred to as the “golden standard” by Solomon Hykes, Docker CTO.

- Universal Containerizer: abstracts away from docker, rkt, kurma?, runc, appc
- Can run multiple frameworks, including Kubernetes and Swarm.
- Supports multi-tenancy.
- Good for Big Data shops and job / task-oriented workloads.
- Good for mixed workloads and with data-locality policies
- Mesos is powerful, scalable and battle-tested.
- Good when you need to do multiple large things; a 10,000+ node cluster system

- Marathon UI is young, but promising.
- Still needs 3rd-party tools
- Marathon interface could be more Docker-friendly (hard to get at volumes and registry)
- May need a dedicated infrastructure IT team
- An overly complex solution for small deployments

Summary

A high-level perspective of the container orchestrator spectrum.

Thank you. Questions?