Introduction to Istio

Decoupling at Layer 5

cloud native and its management

Service Mesh Patterns

Naveen Jain

Our virtual Lab Assistants

Kanishkar J

Shivay Lamba

Connect

Collaborate

Contribute

Join Slack http://slack.layer5.io

Prereq

Confirm Prerequisites

- Start Docker Desktop, Minikube, or another Kubernetes environment (either single-node or multi-node clusters will work).

- Verify that you have a functional Docker environment by running:

$ docker run hello-world

Unable to find image 'hello-world:latest' locally

latest: Pulling from library/hello-world

1b930d010525: Pull complete

Digest: sha256:0e11c388b664df8a27a901dce21eb89f11d8292f7fca1b3e3c4321bf7897bffe

Status: Downloaded newer image for hello-world:latest

Hello from Docker!

Docker and Kubernetes

Prereq

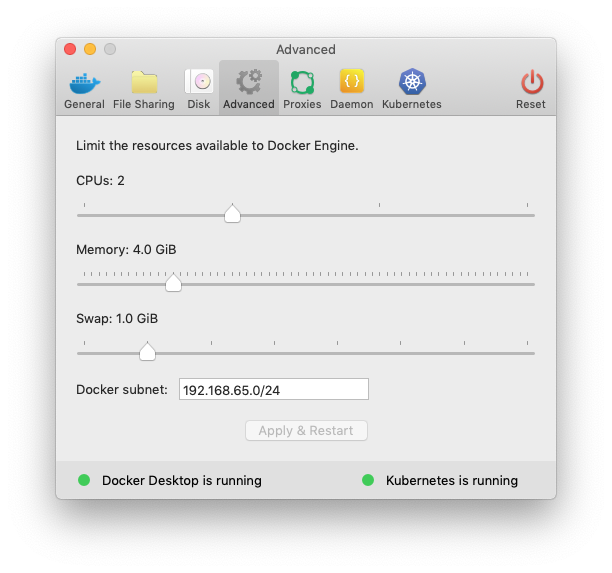

Prepare Docker Desktop

Ensure your Docker Desktop VM has 4GB of memory assigned.

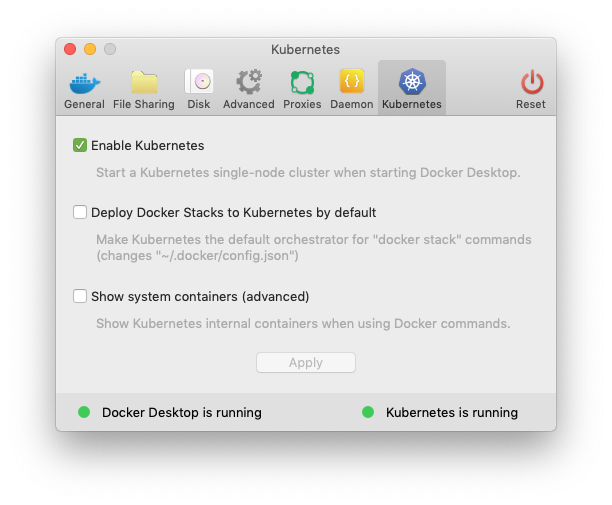

Ensure Kubernetes is enabled.

Prereq

Deploy Kubernetes

- Confirm access to your Kubernetes cluster.

$ kubectl version --short

Client Version: v1.14.1

Server Version: v1.14.1

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

docker-desktop Ready master 10m v1.14.1

Prereq

v1.9 or higher

meshery.io

Deploy Management Plane

Management

Plane

Provides expanded governance, backend system integration, multi-mesh, federation, expanded policy, and dynamic application and mesh configuration.

Control Plane

Data Plane

Install Meshery

Prereq

Using brew

brew tap layer5io/tap

brew install mesheryctl

mesheryctl start

Using bash

curl -L https://git.io/meshery | sudo bash -

If using minikube:

kubectl config view --minify --flatten > config_minikube.yaml

What is a Service Mesh?

a dedicated layer for managing service-to-service communication

So, a microservices platform?

obviously.

Orchestrators don't bring all that you need

and neither do service meshes,

but they do get you closer.

partially.

a services-first network

What is Istio?

an open platform to connect, manage, and secure microservices

- Observability

- Resiliency

- Traffic Control

- Security

- Policy Enforcement

@IstioMesh

Reviews v1

Reviews Pod

Reviews v2

Reviews v3

Product Pod

Details Container

Details Pod

Ratings Container

Ratings Pod

Product Container

Reviews Service

Ratings Service

Details Service

Product Service

BookInfo Sample App

BookInfo Sample App on Service Mesh

Reviews v1

Reviews Pod

Reviews v2

Reviews v3

Product Pod

Details Container

Details Pod

Ratings Container

Ratings Pod

Product Container

Envoy sidecar

Envoy sidecar

Envoy sidecar

Envoy sidecar

Envoy sidecar

Reviews Service

Envoy sidecar

Envoy ingress

Product Service

Ratings Service

Details Service

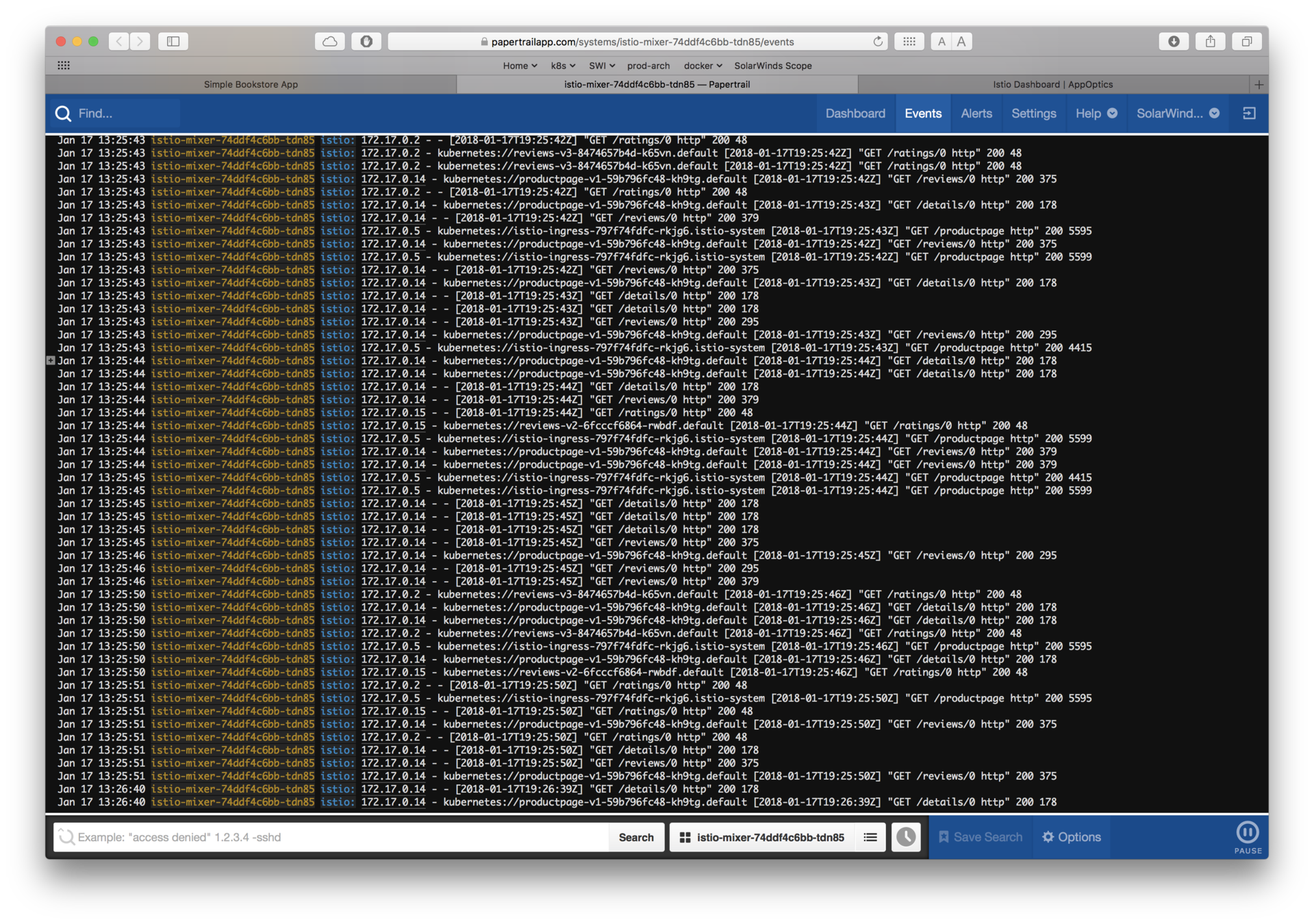

Observability

what gets people hooked on service metrics

Goals

- Metrics without instrumenting apps

- Consistent metrics across fleet

- Trace flow of requests across services

- Portable across metric back-end providers

You get a metric! You get a metric! Everyone gets a metric!

Traffic Control

control over chaos

- Traffic splitting

- L7 tag based routing?

- Traffic steering

- Look at the contents of a request and route it to a specific set of instances.

- Ingress and egress routing
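Traffic splitting boils down to weighted selection among versions of a service. The sketch below illustrates the idea only; the version names, the weights, and the `pick_version` helper are assumptions for illustration, and in a mesh the proxy makes this choice rather than application code.

```python
import random


def pick_version(weights, rng=random):
    """Pick a version name at random, proportionally to its weight.

    `weights` maps a (hypothetical) version name to a non-negative weight.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    point = rng.random() * total  # uniform in [0, total)
    cumulative = 0
    for version, weight in weights.items():
        cumulative += weight
        if point < cumulative:
            return version
    return version  # fallback for floating-point edge cases


if __name__ == "__main__":
    # Send roughly 10% of requests to the canary version.
    print(pick_version({"v1": 90, "v3": 10}))
```

Shifting traffic gradually is then a matter of changing the weights, for example from 90/10 to 50/50 to 0/100.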

Resiliency

- Systematic fault injection

- Timeouts and Retries with timeout budget

- Control connection pool size and request load

- Circuit breakers and Health checks

content-based traffic steering

Missing: application lifecycle management, but not by much

Missing: distributed debugging; provide nascent visibility (topology)

Relating to

Service Meshes

Which is why...

I have a container orchestrator.

Core Capabilities

- Cluster Management

  - Host Discovery

  - Host Health Monitoring

- Scheduling

- Orchestrator Updates and Host Maintenance

- Service Discovery

- Networking and Load Balancing

- Stateful Services

- Multi-Tenant, Multi-Region

Additional Key Capabilities

- Application Health and Performance Monitoring

- Application Deployments

- Application Secrets

minimal capabilities required to qualify as a container orchestrator

Service meshes generally rely on these underlying layers.

Which is why...

I have an API gateway.

Microservices API Gateways

- Ambassador uses Envoy

- Kong uses Nginx

- OpenResty uses Nginx

north-south vs. east-west

Which is why...

I have client-side libraries.

Enforcing consistency is challenging.

Foo Container

Flow Control

Foo Pod

Go Library

A v1

Network Stack

Service Discovery

Circuit Breaking

Application / Business Logic

Bar Container

Flow Control

Bar Pod

Go Library

A v2

Network Stack

Service Discovery

Circuit Breaking

Application / Business Logic

Baz Container

Flow Control

Baz Pod

Java Library

B v1

Network Stack

Service Discovery

Circuit Breaking

Application / Business Logic

Retry Budgets

Rate Limiting

Why use a Service Mesh?

to avoid...

- Bloated service code

- Duplicating work to make services production-ready

  - Load balancing, auto scaling, rate limiting, traffic routing...

- Inconsistency across services

  - Retry, TLS, failover, deadlines, cancellation, etc., for each language and framework

  - Siloed implementations lead to fragmented, non-uniform policy application and difficult debugging

- Diffusing responsibility of service management

What do we need?

• Observability

• Logging

• Metrics

• Tracing

• Traffic Control

• Resiliency

• Efficiency

• Security

• Policy

...a Service Mesh

DEV

OPS

Decoupling at Layer 5

where Dev and Ops meet

Problem: too much infrastructure code in services

Help with Modernization

- Can modernize your IT inventory without:

  - Rewriting your applications

  - Adopting microservices; regular services are fine

  - Adopting new frameworks

  - Moving to the cloud

- Address the long tail of IT services

Get there for free

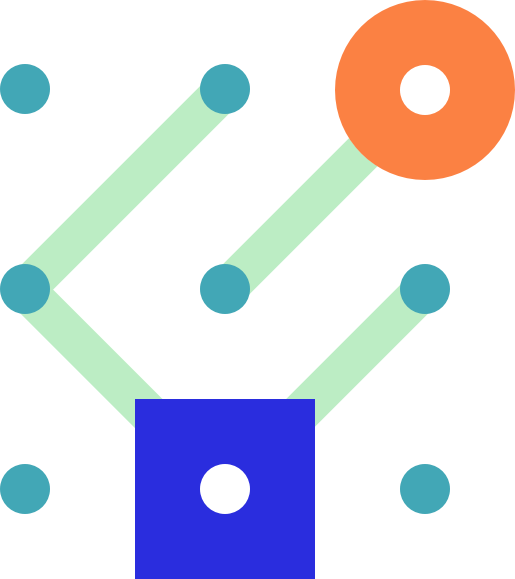

Service Mesh Architectures

Service Mesh Architecture

Data Plane

- Touches every packet/request in the system.

- Responsible for service discovery, health checking, routing, load balancing, authentication, authorization, and observability.

Ingress Gateway

Egress Gateway

Service Mesh Architecture

No control plane? Not a service mesh.

Control Plane

- Provides policy, configuration, and platform integration.

- Takes a set of isolated stateless sidecar proxies and turns them into a service mesh.

- Does not touch any packets/requests in the data path.

Data Plane

- Touches every packet/request in the system.

- Responsible for service discovery, health checking, routing, load balancing, authentication, authorization, and observability.

Ingress Gateway

Egress Gateway

Service Mesh Architecture

Control Plane

Data Plane

- Touches every packet/request in the system.

- Responsible for service discovery, health checking, routing, load balancing, authentication, authorization, and observability.

- Provides policy, configuration, and platform integration.

- Takes a set of isolated stateless sidecar proxies and turns them into a service mesh.

- Does not touch any packets/requests in the data path.

You need a management plane.

Ingress Gateway

Management

Plane

- Provides federation, backend system integration, expanded policy and governance, continuous delivery integration, workflow, chaos, and application performance tuning.

Egress Gateway

Pilot

Citadel

Mixer

Control Plane

Data Plane

istio-system namespace

policy check

Foo Pod

Proxy Sidecar

Service Foo

tls certs

discovery & config

Foo Container

Bar Pod

Proxy Sidecar

Service Bar

Bar Container

Out-of-band telemetry propagation

telemetry

reports

Control flow

application traffic

Application traffic

application namespace

telemetry reports

Istio Architecture

Galley

Ingress Gateway

Egress Gateway

Control Plane

Data Plane

octa-system namespace

policy check

Foo Pod

Proxy

Sidecar

Service Foo

discovery & config

Foo Container

Bar Pod

Service Bar

Bar Container

Out-of-band telemetry propagation

telemetry

reports

Control flow

application traffic

Application traffic

application namespace

telemetry reports

Octarine Architecture

Policy

Engine

Security Engine

Visibility

Engine

+

Proxy

Sidecar

+

Control Plane

Data Plane

linkerd-system namespace

Foo Pod

Proxy Sidecar

Service Foo

Foo Container

Bar Pod

Proxy Sidecar

Service Bar

Bar Container

Out-of-band telemetry propagation

telemetry

scraping

Control flow during request processing

application traffic

Application traffic

application namespace

telemetry scraping

Architecture

destination

Prometheus

Grafana

tap

web

CLI

proxy-api

public-api

Linkerd

proxy-injector

Deploy Istio

- Install Istio using Meshery

open http://localhost:9081

- Find control plane namespace

kubectl get namespaces

- Inspect control plane services

kubectl get svc -n istio-system

- Inspect control plane components

kubectl get pod -n istio-system

Lab 1

github.com/layer5io/istio-service-mesh-workshop

Our service mesh of study: Istio

Pilot

Citadel

Mixer

Control Plane

Data Plane

istio-system namespace

policy check

Foo Pod

Proxy Sidecar

Service Foo

tls certs

discovery & config

Foo Container

Bar Pod

Proxy Sidecar

Service Bar

Bar Container

Out-of-band telemetry propagation

telemetry

reports

Control flow

application traffic

Application traffic

application namespace

telemetry reports

Istio Architecture

Galley

Ingress Gateway

Egress Gateway

Service Proxy Sidecar

- A C++ based L4/L7 proxy

- Low memory footprint

- In production at Lyft™

Capabilities:

- API driven config updates → no reloads

- Zone-aware load balancing w/ failover

- Traffic routing and splitting

- Health checks, circuit breakers, timeouts, retry budgets, fault injection…

- HTTP/2, gRPC, WebSockets, TCP

- Transparent proxying

- Designed for observability

the included battery

Data Plane

Pod

Proxy sidecar

App Container

the workhorse

What are Galley and Pilot?

provides service discovery to sidecars

manages sidecar configuration

Pilot

Citadel

the head of the ship

Mixer

istio-system namespace

system of record for service mesh


provides abstraction from underlying platforms

Galley

Control Plane

What's Mixer for?

- Point of integration with infrastructure back ends

- Intermediates between Istio and back ends, under operator control

- Enables platform and environment mobility

- Responsible for policy evaluation and telemetry reporting

- Provides granular control over operational policies and telemetry

- Has a rich configuration model

- Intent-based config abstracts most infrastructure concerns

an attribute-processing and routing machine

operator-focused

- Precondition checking

- Quota management

- Telemetry reporting

Pilot

Citadel

Mixer

istio-system namespace

Galley

Control Plane

Mixer

Mixer

Control Plane

Data Plane

istio-system namespace

Foo Pod

Proxy sidecar

Service Foo

Foo Container

Out-of-band telemetry propagation

Control flow during request processing

application traffic

application traffic

application namespace

telemetry reports

an attribute processing engine

What's Citadel for?

- Verifiable identity

- Issues certs

- Certs distributed to service proxies

- Mounted as a Kubernetes secret

- Secure naming / addressing

- Traffic encryption

security at scale

security by default

Orchestrate Key & Certificate:

- Generation

- Deployment

- Rotation

- Revocation

Pilot

Citadel

Mixer

istio-system namespace

Galley

Control Plane

Q&A

Break

Deploy Sample App

- Multi-language, multi-service application

- Automatic vs manual sidecar injection

- Verify install

Use Meshery or install manually

Lab 2

Sidecar Injection

Sidecar proxies can be either manually or automatically injected into your pods.

Automatic sidecar injection leverages Kubernetes' Mutating Webhook Admission Controller.

- Verify whether your Kubernetes deployment supports these APIs by executing:

kubectl api-versions | grep admissionregistration

- Inspect the istio-sidecar-injector webhook:

kubectl get mutatingwebhookconfigurations

kubectl get mutatingwebhookconfigurations istio-sidecar-injector -o yaml

If your environment does NOT support this API, then you may manually inject the Istio sidecar.

Lab 2

github.com/layer5io/istio-service-mesh-workshop

Deploy Sample App with Automatic Sidecar Injection

1. Verify presence of the sidecar injector

kubectl -n istio-system get deployment -l istio=sidecar-injector

2. Confirm namespace label

kubectl get ns -L istio-injection

Envoy ingress

Lab 2

github.com/layer5io/istio-service-mesh-workshop

Deploy Sample App with Manual Sidecar Injection

Envoy ingress

In namespaces without the istio-injection label, you can use istioctl kube-inject to manually inject Envoy containers in your application pods before deploying them:

istioctl kube-inject -f <your-app-spec>.yaml | kubectl apply -f -

Lab 2

github.com/layer5io/istio-service-mesh-workshop
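How the choice between automatic and manual injection plays out can be sketched as a small decision function. This is a simplified illustration, not the injector webhook's actual logic: it assumes the namespace label value `istio-injection=enabled` and the per-pod opt-out annotation `sidecar.istio.io/inject: "false"`, and the `should_inject` helper is hypothetical.

```python
def should_inject(namespace_labels, pod_annotations):
    """Return True if a sidecar would be injected automatically.

    Simplified assumption: a pod-level opt-out annotation wins; otherwise
    the namespace must carry the istio-injection=enabled label.
    """
    if pod_annotations.get("sidecar.istio.io/inject") == "false":
        return False
    return namespace_labels.get("istio-injection") == "enabled"


if __name__ == "__main__":
    # Labeled namespace: automatic injection applies.
    print(should_inject({"istio-injection": "enabled"}, {}))
    # Unlabeled namespace: fall back to `istioctl kube-inject`.
    print(should_inject({}, {}))
```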

Expose BookInfo via Istio Ingress Gateway

- Inspecting the Istio Ingress Gateway

- Configure Istio Ingress Gateway for Bookinfo

- Inspect the Istio proxy of the productpage pod

Istio ingress gateway

application traffic

Lab 3

github.com/layer5io/istio-service-mesh-workshop

Telemetry

Core Infrastructure

Initiative

Configuration

Security

Telemetry

Control Plane

Data

Plane

service mesh ns

Foo Pod

Proxy Sidecar

Service Foo

Foo Container

Bar Pod

Proxy Sidecar

Service Bar

Bar Container

Out-of-band telemetry propagation

Control flow

application traffic

http / gRPC

Application traffic

application namespace

Meshery Architecture

Ingress Gateway

Egress Gateway

Management

Plane

meshery

adapter

gRPC

kube-api

kube-system

Load Generation

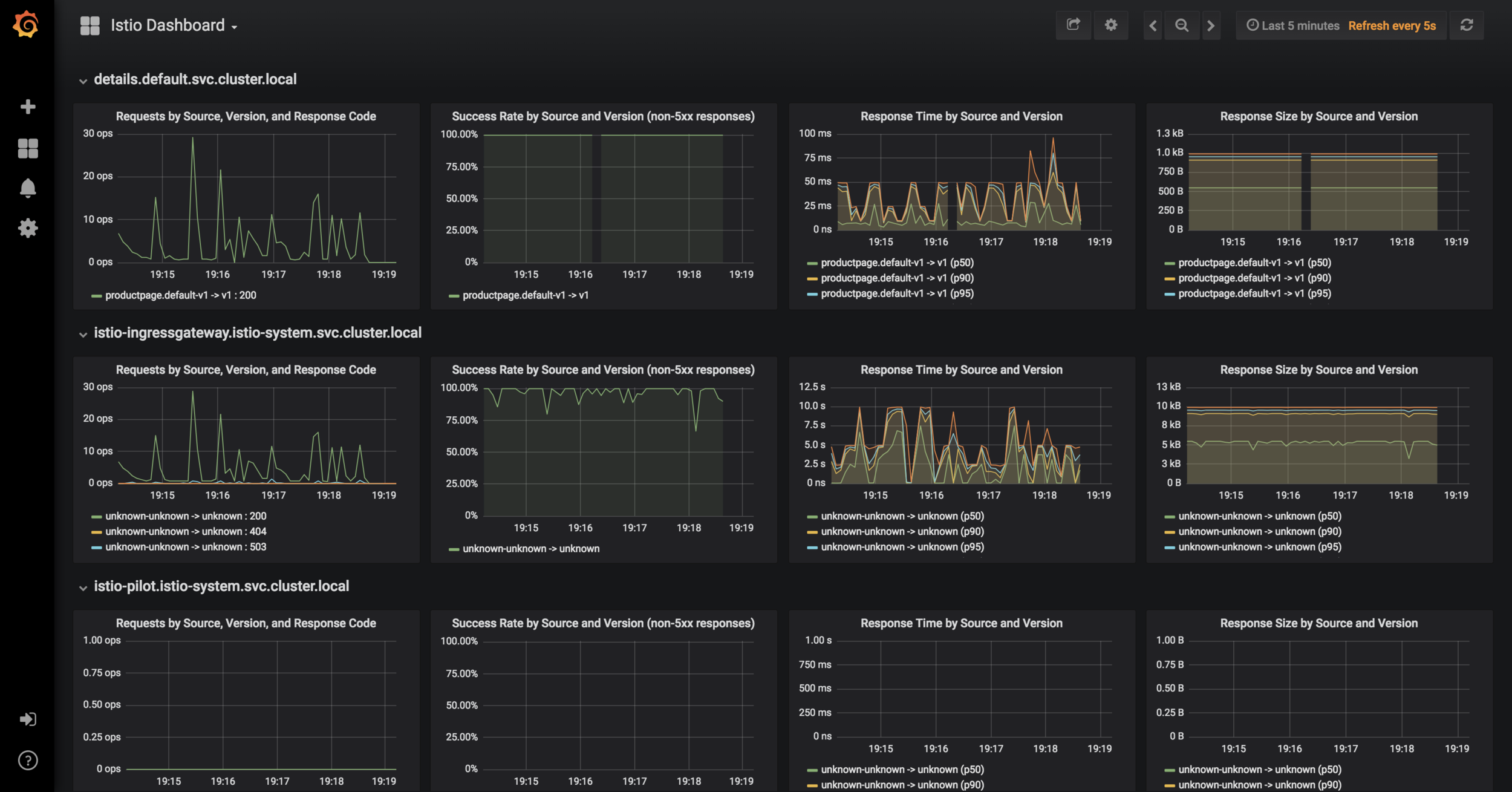

Lab 4

@mesheryio

meshery.io

1. Use Meshery to generate load on Bookinfo

2. View in monitoring tools

Lab 4 Tasks

- Uses pluggable adapters to extend its functionality

- Adapters run within the Mixer process

- Adapters are modules that interface to infrastructure backends

- (logging, metrics, quotas, etc.)

- Multi-interface adapters are possible

- (some adapters send logs and metrics)

Mixer Adapters

Layer5

Prometheus

Stackdriver

Open Policy Agent

Grafana

Fluentd

Statsd


Pilot

Citadel

Mixer

istio-system namespace

Galley

Control Plane

types: logs, metrics, access control, quota

Metrics

Mixer

Control Plane

Data Plane

istio-system namespace

Foo Pod

Proxy sidecar

Service Foo

Foo Container

Out-of-band telemetry propagation

Control flow during request processing

application traffic

application traffic

application namespace

telemetry reports

Lab 4

- Expose services with NodePort:

kubectl -n istio-system edit svc prometheus

- Find port assigned to Prometheus:

kubectl -n istio-system get svc prometheus

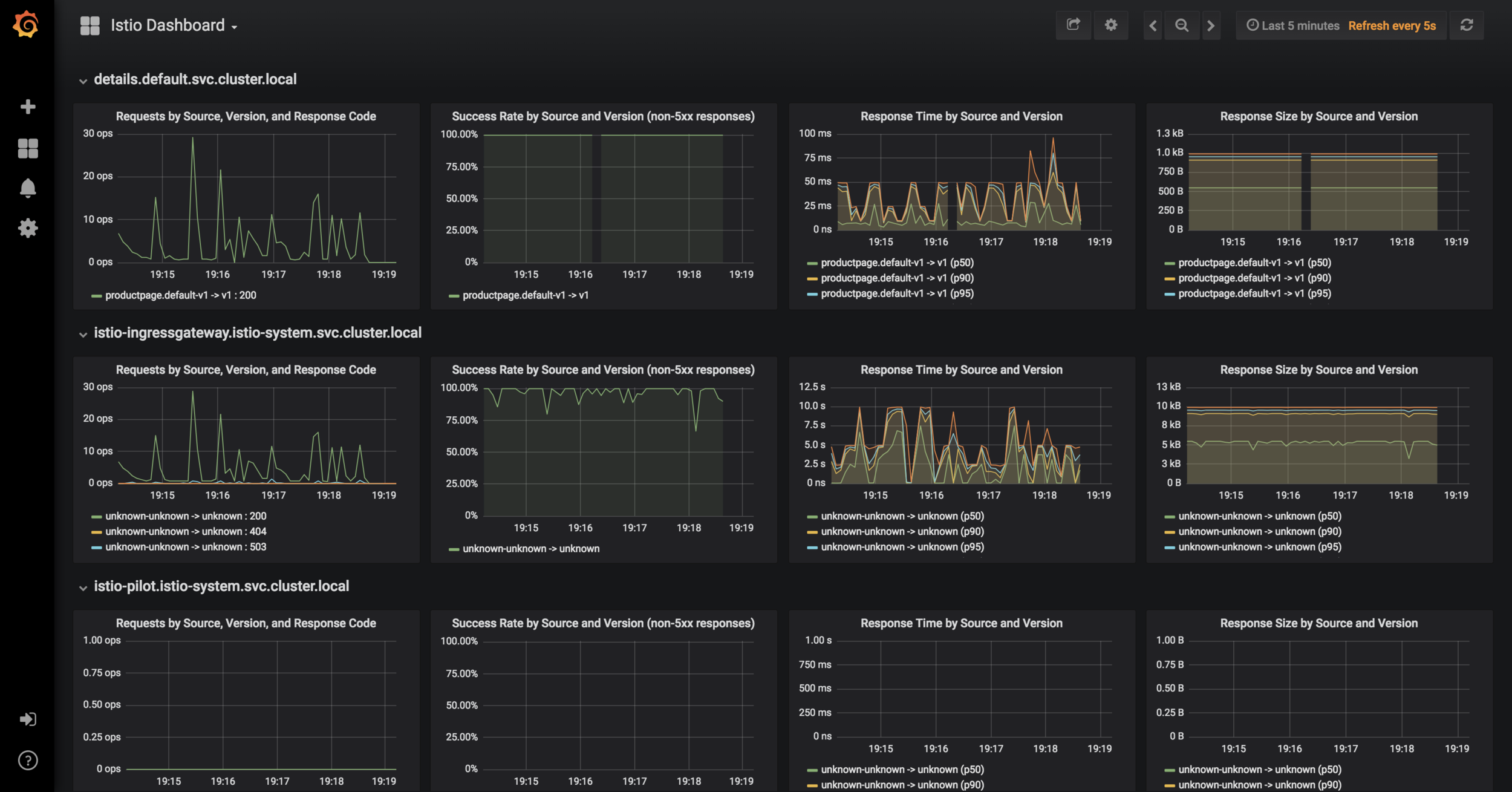

Metrics Dashboard

Lab 4

- Expose services with NodePort:

kubectl -n istio-system edit svc grafana

- Find port assigned to Grafana:

kubectl -n istio-system get svc grafana

Grafana

Lab 4

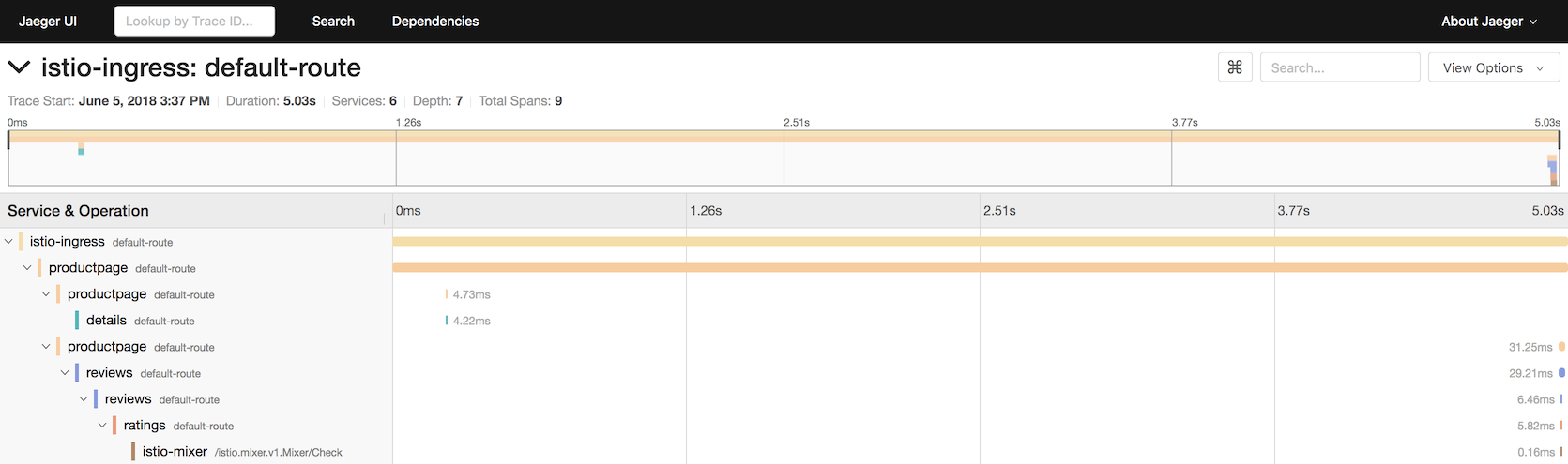

Distributed Tracing

Jaeger

github.com/layer5io/istio-service-mesh-workshop

Distributed Tracing

Jaeger

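The istio-proxy generates spans automatically, but to tie them into a single trace the following headers must be propagated from the incoming request to any outgoing requests (the application has to forward them):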

- x-request-id

- x-b3-traceid

- x-b3-spanid

- x-b3-parentspanid

- x-b3-sampled

- x-b3-flags

- x-ot-span-context
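Inside a service, carrying the headers listed above from the inbound request onto outbound calls can be as small as the sketch below. The `forward_trace_headers` helper and the plain-dict representation of headers are assumptions for illustration; a real service would hook this into its HTTP framework.

```python
# Header names taken from the list above.
TRACE_HEADERS = (
    "x-request-id",
    "x-b3-traceid",
    "x-b3-spanid",
    "x-b3-parentspanid",
    "x-b3-sampled",
    "x-b3-flags",
    "x-ot-span-context",
)


def forward_trace_headers(incoming_headers):
    """Return only the tracing headers found on the inbound request.

    Header names are matched case-insensitively and returned lowercased.
    """
    lowered = {name.lower(): value for name, value in incoming_headers.items()}
    return {name: lowered[name] for name in TRACE_HEADERS if name in lowered}


if __name__ == "__main__":
    inbound = {"X-Request-Id": "abc-123", "x-b3-traceid": "1f", "Cookie": "k=v"}
    # Merge the result into the headers of every outbound request.
    print(forward_trace_headers(inbound))
```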

Lab 4

- Expose services with NodePort:

kubectl -n istio-system edit svc tracing

- Find port assigned to Jaeger:

kubectl -n istio-system get svc tracing

github.com/layer5io/istio-service-mesh-workshop

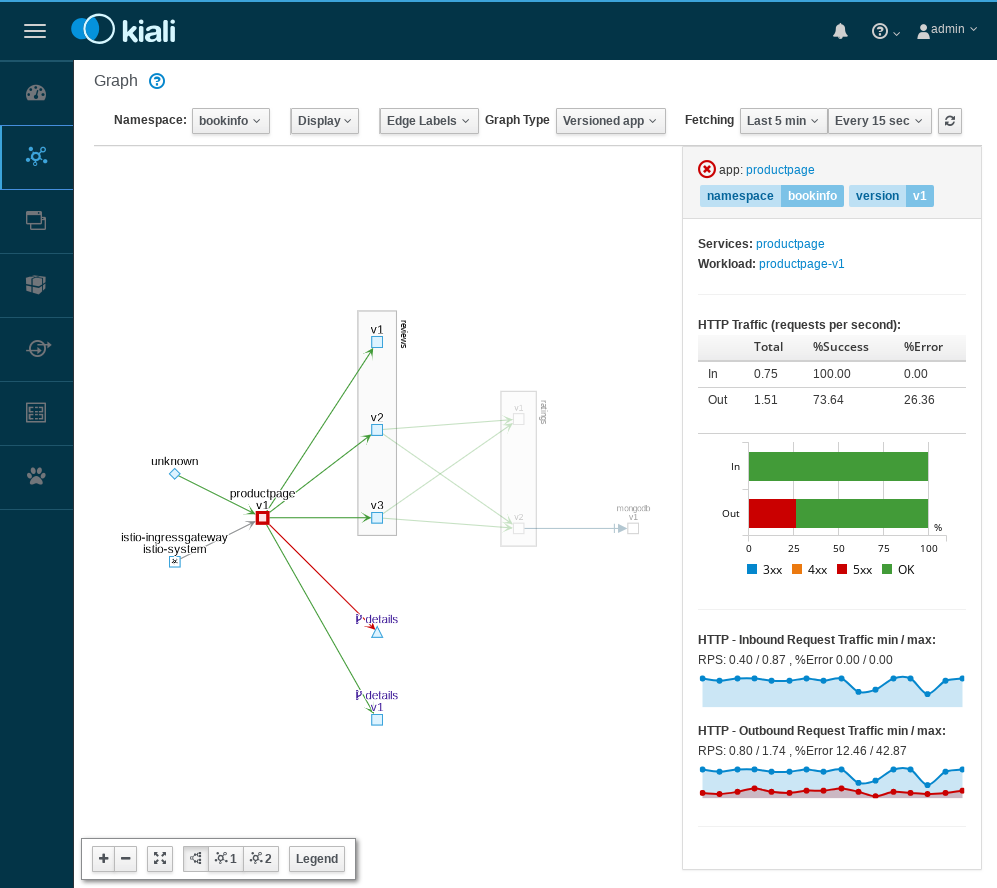

Services Observation

Kiali

Lab 4

- Expose services with NodePort:

kubectl -n istio-system edit svc kiali

- Find port assigned to Kiali:

kubectl -n istio-system get svc kiali

github.com/layer5io/istio-service-mesh-workshop

Q&A

Break

Request Routing and Canary Testing

- Configure the default route for all services to V1

- Content based routing

- Canary Testing - Traffic Shifting

kubectl apply -f samples/bookinfo/networking/destination-rule-all-mtls.yaml

Lab 5
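The routing steps in this lab are expressed as Istio VirtualService resources. As a rough sketch, the function below builds one for the Bookinfo reviews service as a Python dict and prints it as JSON. The field layout follows the `networking.istio.io/v1alpha3` API as used in the Istio 1.x samples; the `reviews_virtual_service` helper, the `end-user` header match, and the v1/v2/v3 subset choice are assumptions for illustration and should be checked against the lab's own manifests and your Istio version.

```python
import json


def reviews_virtual_service(canary_weight, test_user=None):
    """Build a VirtualService manifest (as a dict) for the reviews service.

    canary_weight: percentage of traffic (0-100) shifted from v1 to v3.
    test_user: if set, requests with this end-user header go to v2.
    """
    if not 0 <= canary_weight <= 100:
        raise ValueError("canary_weight must be between 0 and 100")
    http = []
    if test_user is not None:
        # Content-based routing: match on a request header.
        http.append({
            "match": [{"headers": {"end-user": {"exact": test_user}}}],
            "route": [{"destination": {"host": "reviews", "subset": "v2"}}],
        })
    # Traffic shifting: split the remaining traffic by weight.
    http.append({
        "route": [
            {"destination": {"host": "reviews", "subset": "v1"},
             "weight": 100 - canary_weight},
            {"destination": {"host": "reviews", "subset": "v3"},
             "weight": canary_weight},
        ]
    })
    return {
        "apiVersion": "networking.istio.io/v1alpha3",
        "kind": "VirtualService",
        "metadata": {"name": "reviews"},
        "spec": {"hosts": ["reviews"], "http": http},
    }


if __name__ == "__main__":
    print(json.dumps(reviews_virtual_service(10, test_user="jason"), indent=2))
```

The subsets named here only resolve if matching destination rules exist, which is what the `kubectl apply` command above sets up.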

Timeouts & Retries

Web

Service Foo

Timeout = 600ms

Retries = 3

Timeout = 300ms

Retries = 3

Timeout = 900ms

Retries = 3

Service Bar

Database

Timeout = 500ms

Retries = 3

Timeout = 300ms

Retries = 3

Timeout = 900ms

Retries = 3
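Per-hop timeouts and retries that are configured independently can interact badly. The sketch below works through the first set of numbers from the diagram, assuming the hops run in the order Web to Service Foo (600ms), Service Foo to Service Bar (300ms), and Service Bar to Database (900ms), and that "Retries = 3" means one original attempt plus up to three retries. Both helpers are hypothetical.

```python
def worst_case_attempts(retries_per_hop):
    """Upper bound on attempts reaching the last hop when every hop retries."""
    attempts = 1
    for retries in retries_per_hop:
        attempts *= 1 + retries  # the original try plus each retry
    return attempts


def inconsistent_hops(timeouts_ms):
    """Indexes of hops whose timeout is longer than the hop that calls them."""
    return [
        i for i in range(1, len(timeouts_ms))
        if timeouts_ms[i] > timeouts_ms[i - 1]
    ]


timeouts_ms = [600, 300, 900]
retries = [3, 3, 3]
print(worst_case_attempts(retries))    # 64
print(inconsistent_hops(timeouts_ms))  # [2]
```

Under these assumptions a single user request can fan out into as many as 64 database calls, and the last hop waits 900ms for an answer its caller abandoned after 300ms.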

Deadlines

Web

Service Foo

Deadline = 600ms

Deadline = 496ms

Service Bar

Database

Deadline = 428ms

Deadline = 180ms

Elapsed = 104ms

Elapsed = 68ms

Elapsed = 248ms
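The deadline figures in this diagram follow from subtracting the time elapsed at each hop from the budget that hop received. A minimal sketch of that arithmetic, with a hypothetical `propagate_deadline` helper:

```python
def propagate_deadline(initial_ms, elapsed_ms_per_hop):
    """Return the deadline remaining after each hop.

    Raises TimeoutError as soon as the budget is used up, so later hops
    are never called with no time left.
    """
    remaining = initial_ms
    deadlines = []
    for elapsed in elapsed_ms_per_hop:
        remaining -= elapsed
        if remaining <= 0:
            raise TimeoutError("deadline exceeded before reaching the next hop")
        deadlines.append(remaining)
    return deadlines


print(propagate_deadline(600, [104, 68, 248]))  # [496, 428, 180]
```

Unlike independent per-hop timeouts, every hop here works against the same shrinking budget, so no service keeps working on a request its caller has already given up on.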

Fault Injection and Rate-Limiting

- Inject a route rule to create a fault using HTTP delay

- Inject a route rule to create a fault using HTTP abort

- Verify fault injection

Lab 6
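Conceptually, delay and abort faults are each applied to a configured percentage of requests. The sketch below shows that per-request decision only; the `inject_fault` helper and its parameter names are hypothetical, and in Istio the behavior is declared in routing configuration and carried out by the proxy, not by application code.

```python
import random


def inject_fault(delay_percent, delay_ms, abort_percent, abort_status, rng=random):
    """Decide which fault, if any, applies to a single request.

    Returns (delay_to_apply_ms, abort_status_or_None).
    """
    delay = delay_ms if rng.random() * 100 < delay_percent else 0
    status = abort_status if rng.random() * 100 < abort_percent else None
    return delay, status


if __name__ == "__main__":
    # Delay every request by 7 seconds, abort none.
    print(inject_fault(100, 7000, 0, 500))
    # Abort roughly half of requests with HTTP 500, delay none.
    print(inject_fault(0, 0, 50, 500))
```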

Circuit Breaking

- Deploy a client for the app

- With manual sidecar injection:

- Initial test calls from client to server

- Time to trip the circuit

Lab 7
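One way to picture how the circuit trips is as overflow of connection-pool limits: once both the active and pending slots are full, further requests are rejected immediately instead of queuing. The sketch below assumes that style of circuit breaking; the `ConnectionPoolBreaker` class and its limits are hypothetical and much simpler than what the proxy does.

```python
class ConnectionPoolBreaker:
    """Reject new requests once active and pending limits are both full."""

    def __init__(self, max_connections, max_pending):
        self.max_connections = max_connections
        self.max_pending = max_pending
        self.active = 0
        self.pending = 0

    def try_acquire(self):
        """Return 'active', 'pending', or 'rejected' for a new request."""
        if self.active < self.max_connections:
            self.active += 1
            return "active"
        if self.pending < self.max_pending:
            self.pending += 1
            return "pending"
        return "rejected"  # the caller would see a fast failure

    def release(self):
        """Finish one active request and promote a pending one, if any."""
        if self.active == 0:
            raise RuntimeError("no active request to release")
        self.active -= 1
        if self.pending > 0:
            self.pending -= 1
            self.active += 1


if __name__ == "__main__":
    breaker = ConnectionPoolBreaker(max_connections=1, max_pending=1)
    # Three concurrent calls: the third one overflows the pool.
    print([breaker.try_acquire() for _ in range(3)])
```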

Mutual TLS & Identity Verification

- Verify mTLS

- Understanding SPIFFE

Lab 8
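For the SPIFFE step, it helps to see how a workload identity is laid out. The sketch below assumes the `spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>` form that Istio uses on Kubernetes; both helper functions and the example values are hypothetical.

```python
def spiffe_id(trust_domain, namespace, service_account):
    """Compose a SPIFFE ID in the Kubernetes-style ns/sa layout."""
    return f"spiffe://{trust_domain}/ns/{namespace}/sa/{service_account}"


def parse_spiffe_id(uri):
    """Split a SPIFFE ID of the form above into its three parts."""
    prefix = "spiffe://"
    if not uri.startswith(prefix):
        raise ValueError("not a SPIFFE ID: " + uri)
    trust_domain, _, path = uri[len(prefix):].partition("/")
    parts = path.split("/")
    if (not trust_domain or len(parts) != 4 or not all(parts)
            or parts[0] != "ns" or parts[2] != "sa"):
        raise ValueError("unexpected SPIFFE ID layout: " + uri)
    return {
        "trust_domain": trust_domain,
        "namespace": parts[1],
        "service_account": parts[3],
    }


if __name__ == "__main__":
    identity = spiffe_id("cluster.local", "default", "bookinfo-productpage")
    print(identity)
    print(parse_spiffe_id(identity))
```

When verifying mTLS, this is the kind of value to look for in the URI subject alternative name of a workload certificate.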

Lee Calcote

cloud native and its management

layer5.io/subscribe

Service Mesh Deployment Models

If we have time...

Client

Edge Cache

Istio Gateway

(envoy)

Cache Generator

Collection of VMs running APIs

service mesh

Istio VirtualService

Istio VirtualService

Istio ServiceEntry

Situation:

- existing services running on VMs (that have little to no service-to-service traffic).

- nearly all traffic flows from client to the service and back to client.

Benefits:

- gain granular traffic control (e.g., path rewrites).

- detailed service monitoring without immediately deploying a thousand sidecars.

Ingress

Out-of-band telemetry propagation

Application traffic

Control flow

Proxy per Node

Service A

Service A

Service A

linkerd

Node (server)

Service A

Service A

Service B

linkerd

Node (server)

Service A

Service A

Service C

linkerd

Node (server)

Advantages:

- Less (memory) overhead.

- Simpler distribution of configuration information.

- Primarily physical or virtual server based; good for large monolithic applications.

Disadvantages:

- Coarse support for encrypting service-to-service communication; encryption and authentication policies apply host-to-host instead.

- Blast radius of a proxy failure includes all applications on the node, which is essentially equivalent to losing the node itself.

- Not a transparent entity; services must be aware of its existence.

Configuration

Security

Telemetry

Control Plane

Data

Plane

service mesh ns

Foo Pod

Proxy Sidecar

Service Foo

Foo Container

Bar Pod

Proxy Sidecar

Service Bar

Bar Container

Out-of-band telemetry propagation

Control flow

Application traffic

application namespace

Istio v1.0 Multi-Cluster

Ingress Gateway

kube-api

kube-system

Baz Pod

Proxy Sidecar

Service Bar

Baz Container

application namespace

kube-api

kube-system

LOCAL CLUSTER

REMOTE CLUSTER

Egress Gateway

injector

Shared Root CA

layer5.io/books

Advantages:

- Good starting point for building a brand-new microservices architecture or for migrating from a monolith.

Disadvantages:

- When the number of services increases, it becomes difficult to manage.

Router "Mesh"

Fabric Model

Advantages:

- Granular encryption of service-to-service communication.

- Can be gradually added to an existing cluster without central coordination.

Disadvantages:

- Lack of central coordination. Difficult to scale operationally.

Ingress or Edge Proxy

Advantages:

- Works with existing services that can be broken down over time.

Disadvantages:

- Is missing the benefits of service-to-service visibility and control.